LLMs are reaching a point of maturity where they are sufficiently capable of powering sophisticated AI agents. The agents they power are not free-range, pseudo-AGI-like agents, but rather vertical-specific agents addressing a specific use case, with a specific focus. Examples of these AI agents launched in the past year include Replit's coding agent, Elastic's security assistant, and Uber's developer productivity platform.

A significant portion of these agents rely on large, closed-source models. This approach introduces several challenges when deploying agents within an enterprise environment. These challenges include substantial inference costs, significant latency impacting real-time performance, and concerns about data privacy and compliance, especially when dealing with sensitive information.

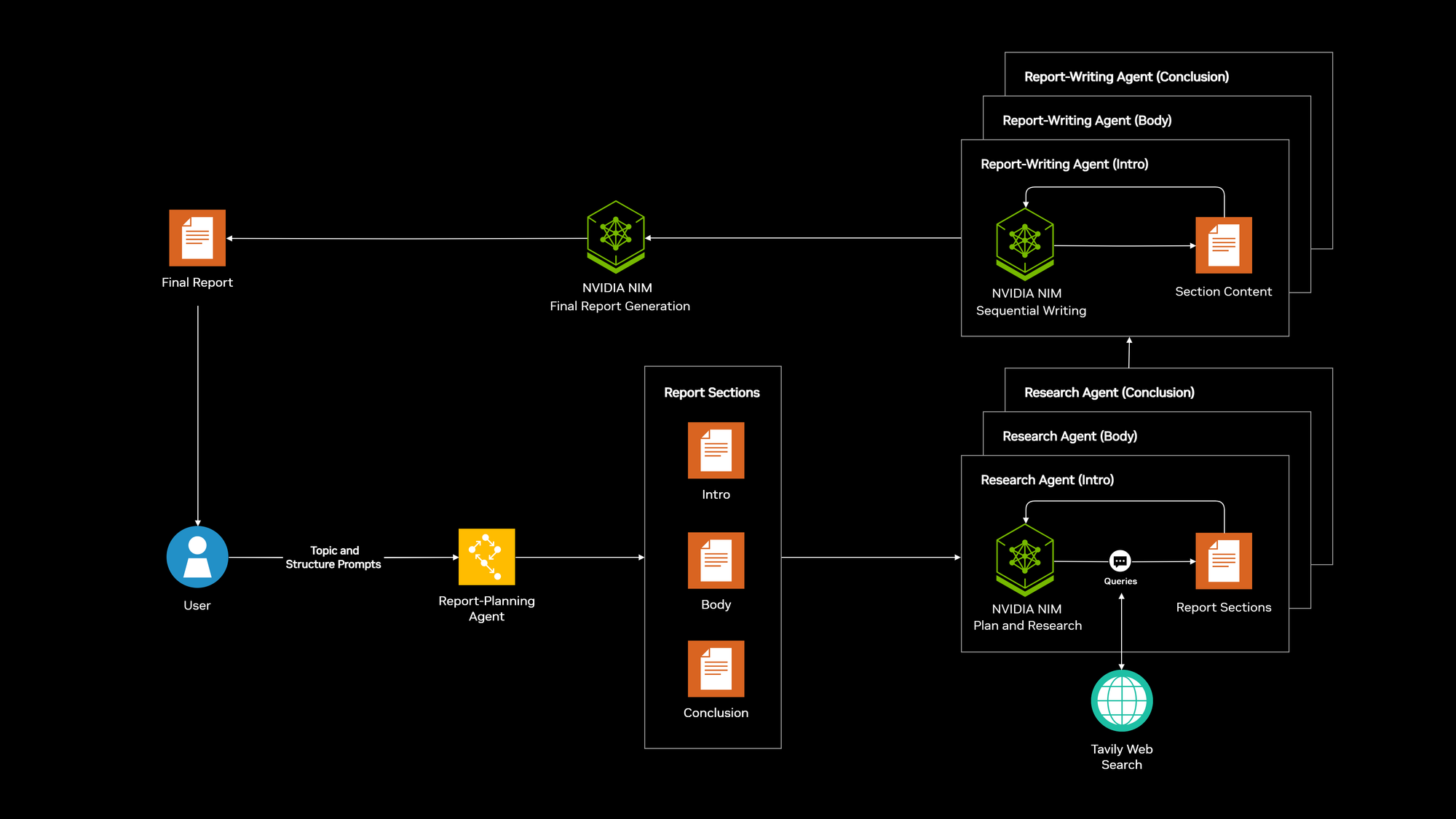

NVIDIA NIM microservices, part of NVIDIA AI Enterprise, helps address these issues. We are excited to partner with NVIDIA and release a new technical blueprint for AI agents, known as Structured Report Generation. Using LangGraph, the blueprint allows users to define a topic and specify an outline to guide the agent in searching the web for relevant information, so it can return a report in the requested structured format.

“LangChain's AI blueprint for structured report generation built on NVIDIA AI Enterprise and NVIDIA NIM microservices empowers businesses to create customized, high-performance AI agents that not only address key challenges in deployment and security but also harness the full potential of open-source LLMs for transformative business outcomes,” said Justin Boitano, vice president of Enterprise AI Software Products at NVIDIA.

Key Links:

Technical Stack

The blueprint is built on the LangGraph and the NVIDIA AI Enterprise platform, and is comprised of the following:

* NVIDIA NIM: Provides a portable, secure platform for running optimized models anywhere–in the cloud, datacenter or workstations, offering cost-effectiveness, high throughput, and low latency.

* Open models, including Mistral AI and Meta Llama: Allows for greater control and customization, as well as helps address data privacy concerns, which are supported by NVIDIA NIM with day-0 support.

* LangGraph: Enables the construction of complex agent workflows with fine-grained control over the cognitive architecture, making it possible to build performant agents with smaller models.

* LangGraph Platform: Facilitates the deployment of agents, with the option to run on-premise for enhanced security and control.

* LangSmith: Provides comprehensive monitoring and testing capabilities, also deployable on-premise, allowing for rigorous evaluation and debugging of agents.

Detailed Breakdown

By leveraging the community models supported by NIM microservices, we are enabling enterprises to deploy their AI agents anywhere, on cloud or data center, reducing reliance on 3rd-party-hosted inference and significantly cutting costs. The optimized nature of NIM microservices contributes to significantly faster inference times.

Open models supported by NVIDIA NIM, such as Mistral AI models or Meta’s Llama family of models, allow for greater transparency and customization. This is crucial for enterprises that require full control over their data and model behavior. Open-source models also allow for fine-tuning to specific tasks, further improving performance and reducing costs.

LangGraph provides a structured framework for building complex, multi-step agent workflows. It allows developers to define the cognitive architecture of their agents, enabling them to build complex reasoning and decision-making processes. This level of control is essential for building agents that can perform complex tasks with smaller, more efficient models.

The LangGraph platform simplifies the deployment of agents. Its ability to also run on premises ensures that sensitive data remains within the organization's infrastructure, helping address key data privacy concerns.

LangSmith provides the necessary tools for monitoring and testing agents, allowing teams to track performance, identify bottlenecks, and ensure the reliability of their agents. The on-premises deployment option allows for complete control over the data used for testing and monitoring.

Conclusion

The AI Blueprint for structured report generation co-created by LangChain and NVIDIA will help organizations unlock the full potential of AI agents. This powerful combination enables the development of secure, cost-effective, and high-performing agents tailored to specific enterprise needs, moving beyond the limitations of closed-source solutions. Embrace the future of AI with a solution that prioritizes control, customization, and performance.

Key Links: