Editor's Note: This post is written by Dexter Storey, Sarim Malik, and Ted Spare from the Rubric Labs team.

Goal

The purpose of this guide is to explain the underlying tech and logic used to deploy a scheduling agent, Cal.ai, in production using LangChain.

Context

Recently, our team at Rubric Labs had the opportunity to build Cal.com's AI-driven email assistant. For context, we’re a team of builders who love to tackle challenging engineering problems.

The vision for the project was to simplify the complex world of calendar management. Some of the core features we had in mind included:

- Turning informal emails into bookings: "Want to meet tomorrow at 2?"

- Listing and rearranging bookings: "Cancel my next meeting"

- Answering basic questions: "How does my Tuesday look?"

and more. In summary, we wanted to build a personal, AI-powered scheduling assistant that can offer a complete suite of CRUD operations to the end user, all within the confines of their email client using natural language.

Architecture

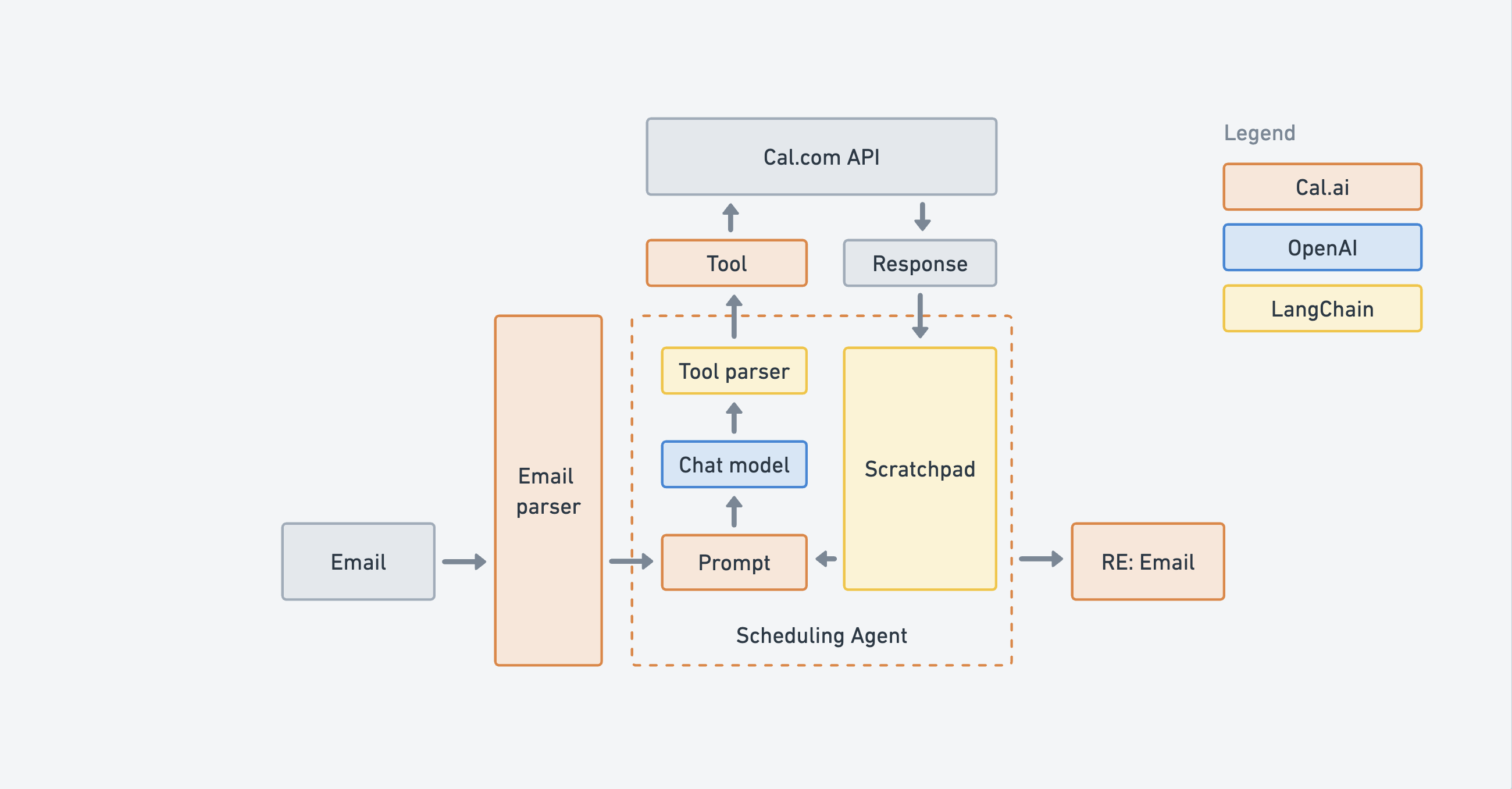

We decided to build this as an AI agent, specifically an OpenAI functions agent, which is well suited to working with structured data. This was a natural fit because Cal.com's API exposes a set of booking operations with clearly defined inputs. The underlying architecture is explained below.

Input

A Cal.com user may email username@cal.ai (e.g. ted@cal.ai) with a request such as “Can you book a meeting with sarim@rubriclabs.com sometime tomorrow?”.

The incoming email is parsed and cleaned in the receive route using MailParser, and the sender addresses are verified using DKIM records, which makes them hard to spoof.

Here we also make additional checks, such as ensuring that the email is from a Cal.com user to prevent misuse. After the email has been verified and parsed, it is passed to the agent loop.
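The checks above can be sketched as a small gate that runs before the agent loop. This is a minimal, dependency-free illustration, not Cal.ai's actual receive route: the `ParsedInboundEmail` and `CalUser` shapes, the `dkimPassed` flag (assumed to be computed upstream), the `findUserByEmail` lookup, and the rule that a user may only email their own `username@cal.ai` inbox are all hypothetical.

```typescript
// Hypothetical shape of an inbound email after parsing; field names are illustrative.
interface ParsedInboundEmail {
  from: string; // sender address
  to: string; // agent address, e.g. "ted@cal.ai"
  dkimPassed: boolean; // result of DKIM verification, assumed computed upstream
  text: string; // cleaned plain-text body
}

// Hypothetical minimal view of a Cal.com user record.
interface CalUser {
  id: number;
  username: string;
  email: string;
}

type GateResult =
  | { ok: true; user: CalUser; request: string }
  | { ok: false; reason: string };

const AGENT_DOMAIN = "cal.ai";

// Extracts "ted" from "ted@cal.ai"; returns null for any other domain.
function usernameFromAgentAddress(address: string): string | null {
  const match = /^([^@\s]+)@([^@\s]+)$/.exec(address.trim().toLowerCase());
  if (!match || match[2] !== AGENT_DOMAIN) return null;
  return match[1];
}

// Rejects anything that should never reach the agent loop.
function gateInboundEmail(
  email: ParsedInboundEmail,
  findUserByEmail: (email: string) => CalUser | undefined,
): GateResult {
  if (!email.dkimPassed) return { ok: false, reason: "DKIM verification failed" };
  const username = usernameFromAgentAddress(email.to);
  if (username === null) return { ok: false, reason: "not addressed to a cal.ai inbox" };
  const user = findUserByEmail(email.from.trim().toLowerCase());
  if (!user) return { ok: false, reason: "sender is not a Cal.com user" };
  // Assumed policy: users may only instruct their own agent inbox.
  if (user.username.toLowerCase() !== username) {
    return { ok: false, reason: "sender does not own this inbox" };
  }
  return { ok: true, user, request: email.text };
}
```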

Agent

The agent is a LangChain OpenAI functions agent, which relies on GPT models that OpenAI has fine-tuned to detect when a function should be called. The agent is given a set of pre-defined functions, or tools, and the model decides when to call one and with which arguments. This lets us specify the required inputs and the desired output.

The agent's implementation can be found in the agent loop.
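In OpenAI's function-calling format, the model signals a tool call by returning an assistant message with a `function_call` field that holds the function `name` and its `arguments` as a JSON-encoded string. LangChain's agent handles this internally; the sketch below only illustrates the dispatch step, using a hypothetical `tools` registry. Returning errors as text rather than throwing is an assumption here, chosen so the model can see what went wrong and try again.

```typescript
// Shape of an assistant message in OpenAI's function-calling format.
interface AssistantMessage {
  role: "assistant";
  content: string | null;
  function_call?: { name: string; arguments: string }; // `arguments` is a JSON-encoded string
}

// Hypothetical tool signature: parsed arguments in, text observation out.
type ToolFn = (args: Record<string, unknown>) => Promise<string>;

type HandledMessage =
  | { kind: "final"; text: string }
  | { kind: "observation"; tool: string; result: string };

async function handleAssistantMessage(
  message: AssistantMessage,
  tools: Record<string, ToolFn>,
): Promise<HandledMessage> {
  // No function_call means the model is giving its final answer.
  if (!message.function_call) {
    return { kind: "final", text: message.content ?? "" };
  }
  const { name, arguments: rawArgs } = message.function_call;
  const tool = tools[name];
  if (!tool) {
    return { kind: "observation", tool: name, result: `Error: unknown tool "${name}"` };
  }
  let args: Record<string, unknown>;
  try {
    args = JSON.parse(rawArgs);
  } catch {
    return { kind: "observation", tool: name, result: "Error: arguments were not valid JSON" };
  }
  return { kind: "observation", tool: name, result: await tool(args) };
}
```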

There are two required inputs for an OpenAI functions agent:

- Tools: a tool is simply a JavaScript function with a name, description, and input schema

- Chat model: a chat model is a variant of an LLM that uses "chat messages" as its inputs and outputs

In addition to the tools and the chat model, we also pass a prefix prompt to add context for the model. Let’s look into each of the inputs.

Prompt

A prompt is a way to program the model by providing context. The user’s information (userId, user.username, user.timezone, etc.) is used to construct a prompt which is passed to the agent.

```typescript
const prompt = `You are Cal.ai - a bleeding edge scheduling assistant that interfaces via email.
Make sure your final answers are definitive, complete and well formatted.
Sometimes, tools return errors. In this case, try to handle the error
intelligently or ask the user for more information.
Tools will always handle times in UTC, but times sent to users should be
formatted per that user's timezone.
The primary user's id is: ${userId}
The primary user's username is: ${user.username}
The current time in the primary user's timezone is: ${now(user.timeZone)}
The primary user's time zone is: ${user.timeZone}
The primary user's event types are: ${user.eventTypes}
The primary user's working hours are: ${user.workingHours}
...
`;
```

Find the full prompt here.
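The template above interpolates user fields directly into the prompt. A minimal sketch of the same idea, assuming a hypothetical `PromptUser` shape and a stand-in for the `now` helper built on `Intl.DateTimeFormat` (the real helper's output format is not shown in the source). If fields such as event types or working hours are arrays of objects, interpolating them directly would produce `[object Object]`, so this sketch serializes them with `JSON.stringify`.

```typescript
// Hypothetical user shape; field names mirror the template above but are assumptions.
interface PromptUser {
  id: number;
  username: string;
  timeZone: string; // IANA time zone name, e.g. "America/New_York"
  eventTypes: { id: number; title: string; length: number }[];
  workingHours: { days: number[]; startTime: string; endTime: string }[];
}

// Hypothetical stand-in for `now`: formats a date as "YYYY-MM-DD HH:mm" in the given zone.
function now(timeZone: string, date: Date = new Date()): string {
  const parts = new Intl.DateTimeFormat("en-US", {
    timeZone,
    year: "numeric",
    month: "2-digit",
    day: "2-digit",
    hour: "2-digit",
    minute: "2-digit",
    hourCycle: "h23",
  }).formatToParts(date);
  const get = (type: string) => parts.find((p) => p.type === type)?.value ?? "";
  return `${get("year")}-${get("month")}-${get("day")} ${get("hour")}:${get("minute")}`;
}

// Builds a subset of the prompt; structured fields are serialized so the model sees real values.
function buildPrompt(user: PromptUser, date: Date = new Date()): string {
  return [
    "You are Cal.ai - a bleeding edge scheduling assistant that interfaces via email.",
    "Tools will always handle times in UTC, but times sent to users should be formatted per that user's timezone.",
    `The primary user's id is: ${user.id}`,
    `The primary user's username is: ${user.username}`,
    `The current time in the primary user's timezone is: ${now(user.timeZone, date)}`,
    `The primary user's time zone is: ${user.timeZone}`,
    `The primary user's event types are: ${JSON.stringify(user.eventTypes)}`,
    `The primary user's working hours are: ${JSON.stringify(user.workingHours)}`,
  ].join("\n");
}
```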

Chat Model

A chat model is a variant of a language model that uses chat messages as its input and output. For this project, we use OpenAI's GPT-4 model, which can be accessed using an OPENAI_API_KEY (read here to generate an API key).

To drive adoption, we knew the user experience had to be lightning fast. We experimented with gpt-3.5-turbo but, interestingly, it often took longer overall because it completed tasks in more roundabout ways.

The temperature is set to 0 to generate more consistent output. A higher temperature leads to more creative but less consistent output.
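To make the effect of temperature concrete: in the textbook formulation, the model's raw scores (logits) are divided by the temperature before a softmax turns them into sampling probabilities, and temperature 0 is treated as always picking the top-scoring token. The function below illustrates that formula only; it is not OpenAI's implementation, and hosted models can still vary slightly between runs at temperature 0.

```typescript
// Converts raw next-token scores (logits) into probabilities at a given temperature.
// Smaller temperatures sharpen the distribution; temperature 0 is treated as greedy
// decoding, putting all probability on the highest-scoring token.
function softmaxWithTemperature(logits: number[], temperature: number): number[] {
  if (temperature <= 0) {
    const best = logits.indexOf(Math.max(...logits));
    return logits.map((_, i) => (i === best ? 1 : 0));
  }
  const scaled = logits.map((l) => l / temperature);
  const max = Math.max(...scaled); // subtract the max for numerical stability
  const exps = scaled.map((s) => Math.exp(s - max));
  const sum = exps.reduce((a, b) => a + b, 0);
  return exps.map((e) => e / sum);
}
```

With logits `[2, 1, 0]`, temperature 0 gives `[1, 0, 0]`, while temperature 2 spreads probability more evenly than temperature 1.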

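```typescript
import { ChatOpenAI } from "langchain/chat_models/openai";

// `env` holds the app's environment variables.
const model = new ChatOpenAI({
  modelName: "gpt-4",
  openAIApiKey: env.OPENAI_API_KEY,
  temperature: 0, // favor consistent output over creative output
});
```

Tools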

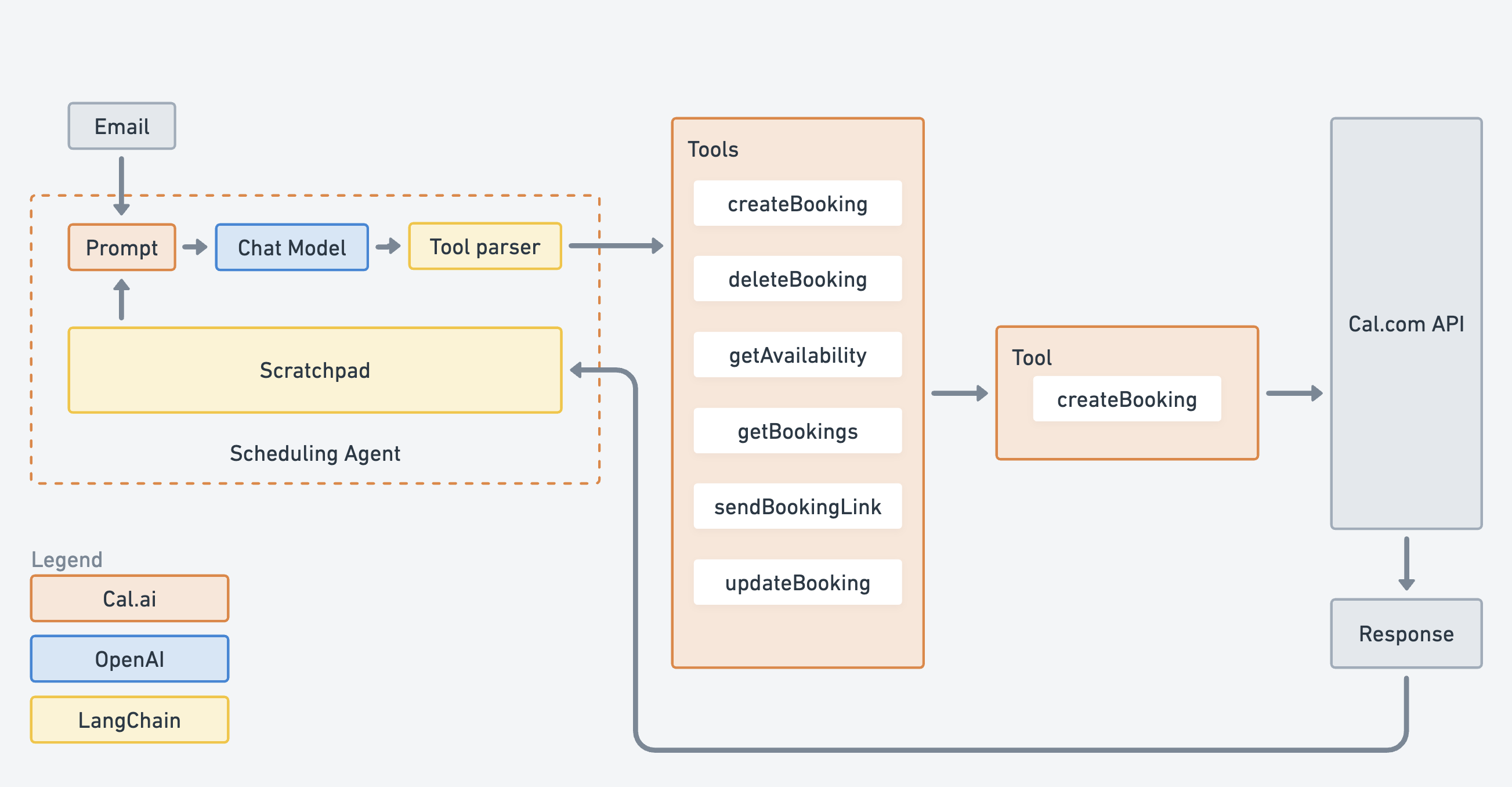

A tool is composed of a JavaScript function, a name, an input schema (defined with Zod), and a description, and is designed to work with structured data.

The tools used for this project are of type DynamicStructuredTool, an extension of LangChain’s StructuredTool class that essentially overrides the _call method with the function provided. See the schema below for a DynamicStructuredTool.

```typescript
import { DynamicStructuredTool } from "langchain/tools";
import { z } from "zod";

new DynamicStructuredTool({
  description: "Creates a booking on the primary user's calendar.", // Description
  func: createBooking, // Function run when the tool is called
  name: "createBooking", // Name
  schema: z.object({ // Schema
    from: z.string().describe("ISO 8601 datetime string"),
    ...
  }),
});
```

Inputs for a DynamicStructuredTool; the complete example can be seen here.
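In LangChain, the Zod schema is used to validate the arguments the model produces before `func` runs. The dependency-free sketch below mimics that flow with a hand-written validator in place of Zod; `StructuredToolSketch`, `invokeTool`, and the loose ISO 8601 regex are hypothetical. LangChain's own handling of invalid input may differ; here the error is returned as a string so the caller can pass it back to the model.

```typescript
// A hypothetical, dependency-free stand-in for a structured tool: the schema is
// reduced to a validator that returns either typed input or an error message.
interface StructuredToolSketch<In> {
  name: string;
  description: string;
  validate: (raw: unknown) => { ok: true; value: In } | { ok: false; error: string };
  func: (input: In) => Promise<string>;
}

// Validate first; call the function only when the input matches the schema.
async function invokeTool<In>(tool: StructuredToolSketch<In>, raw: unknown): Promise<string> {
  const parsed = tool.validate(raw);
  if (!parsed.ok) return `Error: invalid input for ${tool.name}: ${parsed.error}`;
  return tool.func(parsed.value);
}

// Loose ISO 8601 datetime check (UTC "Z" or numeric offset); illustrative only.
const ISO_8601 = /^\d{4}-\d{2}-\d{2}T\d{2}:\d{2}(:\d{2}(\.\d+)?)?(Z|[+-]\d{2}:\d{2})$/;

const createBookingSketch: StructuredToolSketch<{ from: string }> = {
  name: "createBooking",
  description: "Creates a booking on the primary user's calendar.",
  validate: (raw) => {
    const from = (raw as { from?: unknown } | null)?.from;
    if (typeof from !== "string" || !ISO_8601.test(from) || Number.isNaN(Date.parse(from))) {
      return { ok: false, error: "`from` must be an ISO 8601 datetime string" };
    }
    return { ok: true, value: { from } };
  },
  // Hypothetical body: a real tool would call the Cal.com API here.
  func: async ({ from }) => `Booked a meeting starting at ${from}`,
};
```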

By creating a suite of tools, we give the agent extra capabilities to perform pre-defined actions. All tools used for this project are documented here and listed below. Each tool contains the logic needed to take the agent's structured input, perform a CRUD task against the Cal.com API, and handle the response.

```typescript
const tools = [
  createBooking(apiKey, userId, users),
  deleteBooking(apiKey),
  getAvailability(apiKey),
  getBookings(apiKey, userId),
  sendBookingLink(apiKey, user, users, agentEmail),
  updateBooking(apiKey, userId),
];
```

The inputs to the tools above are values we don’t want the AI agent to have access to, so they are injected directly into each tool, bypassing the agent loop.
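This injection pattern is essentially a closure: each tool factory captures values like `apiKey` when the tool is built, and only the tool's name, description, and input schema are described to the model. A minimal sketch, where `deleteBookingTool`, the `HttpClient` callback, and the `/bookings/:id` path are hypothetical stand-ins rather than Cal.ai's code or the Cal.com API.

```typescript
// What the model is allowed to see about a tool.
interface PublicToolInfo {
  name: string;
  description: string;
}

interface InjectedTool extends PublicToolInfo {
  func: (input: { bookingId: number }) => Promise<string>;
}

// Hypothetical HTTP callback: performs the request and returns the status code.
type HttpClient = (path: string, apiKey: string) => Promise<number>;

// Factory: `apiKey` is captured in a closure, so it is available when the tool
// runs but never appears in anything sent to the model.
function deleteBookingTool(apiKey: string, http: HttpClient): InjectedTool {
  return {
    name: "deleteBooking",
    description: "Deletes a booking on the primary user's calendar by id.",
    func: async ({ bookingId }) => {
      const status = await http(`/bookings/${bookingId}`, apiKey);
      return status < 300 ? `Deleted booking ${bookingId}.` : `Error: API returned ${status}.`;
    },
  };
}

// Only these fields are serialized when describing the tool to the model.
function toModelView(tool: InjectedTool): PublicToolInfo {
  return { name: tool.name, description: tool.description };
}
```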

Using the dynamic prompt and the chat model, the agent selects the most appropriate tool to handle the user’s request and then runs in a loop until the request has been fulfilled. Because each tool's result, including any error, is fed back to the model on the next iteration, the agent can recover from failures, even falling back to an alternative tool when one returns an error.
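The loop described above can be sketched without LangChain. Here a hypothetical `Planner` stands in for the chat model: given the request and the observations so far, it either asks for a tool call or returns a final answer. Tool errors are caught and fed back as observations, which is what allows a fallback to another tool. LangChain's `AgentExecutor` implements a more complete version of this loop, including a configurable iteration limit.

```typescript
type Step =
  | { type: "call"; tool: string; input: Record<string, unknown> }
  | { type: "final"; answer: string };

interface Observation {
  tool: string;
  result: string;
}

// Hypothetical model interface: picks the next step from the request and history.
type Planner = (request: string, history: Observation[]) => Promise<Step>;
type Tool = (input: Record<string, unknown>) => Promise<string>;

async function runAgentLoop(
  request: string,
  plan: Planner,
  tools: Record<string, Tool>,
  maxIterations = 5,
): Promise<string> {
  const history: Observation[] = [];
  for (let i = 0; i < maxIterations; i++) {
    const step = await plan(request, history);
    if (step.type === "final") return step.answer;
    const tool = tools[step.tool];
    let result: string;
    try {
      result = tool ? await tool(step.input) : `Error: unknown tool "${step.tool}"`;
    } catch (err) {
      // Errors become observations instead of aborting the run.
      result = `Error: ${err instanceof Error ? err.message : String(err)}`;
    }
    history.push({ tool: step.tool, result });
  }
  return "Sorry, I couldn't complete that request.";
}
```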

Executor

Finally, the tools, chat model, and prompt are used to initialize an agent and run the executor to generate a response.

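```typescript
import { initializeAgentExecutorWithOptions } from "langchain/agents";

const executor = await initializeAgentExecutorWithOptions(tools, model, {
  agentArgs: { prefix: prompt }, // prepend the dynamic prompt
  agentType: "openai-functions",
  verbose: true, // log each step of the agent loop
});
```

Response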

After the agent has looped through its tools, exchanging messages with the LLM and interacting with the Cal.com API, it concludes with a final response. This response is then emailed to the user through an email service such as SendGrid or Resend. The email is either a confirmation or an error message.
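Sending the response is mostly plumbing. A minimal sketch of turning the agent's final output into a threaded reply, assuming a hypothetical `InboundMeta` shape; the provider call itself is omitted because SendGrid, Resend, and others each have their own SDKs. `In-Reply-To` and `References` are the standard email headers that keep a reply in the same thread.

```typescript
// Hypothetical metadata kept from the inbound email.
interface InboundMeta {
  from: string; // the user who emailed the agent
  to: string; // the agent address, e.g. "ted@cal.ai"
  subject: string;
  messageId?: string; // Message-ID of the inbound email, if available
}

interface OutboundEmail {
  from: string;
  to: string;
  subject: string;
  text: string;
  headers: Record<string, string>;
}

// Builds a reply from the agent inbox back to the sender; the result would then be
// passed to an email provider's SDK (not shown).
function buildReply(inbound: InboundMeta, agentOutput: string): OutboundEmail {
  const subject = /^re:/i.test(inbound.subject) ? inbound.subject : `Re: ${inbound.subject}`;
  const headers: Record<string, string> = {};
  if (inbound.messageId) {
    // Threading headers so mail clients group the reply with the original message.
    headers["In-Reply-To"] = inbound.messageId;
    headers["References"] = inbound.messageId;
  }
  return { from: inbound.to, to: inbound.from, subject, text: agentOutput.trim(), headers };
}
```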

Conclusion

It’s clear that AI agents with scoped tools are a powerful combination. Combining the knowledge of an LLM with structured tools lets agents tackle natural-language problems against structured data, which makes them more reliable. We’re extremely excited about the future of AI agents as they continue to evolve, and we hope that our partnership with Cal.com and LangChain to create an open-source agent in production will serve as a template for future projects.