We’ve got a new member in the LangChain product family! LangGraph Cloud is taking its first steps today, so read on to see why it’s interesting for agent workflows, how it differs from LangGraph, and how to sign up for the beta. Plus, get all the usual integration and community updates below.

Product Updates

Highlighting the latest product updates and news for LangChain, LangSmith, and LangGraph

☁️ LangGraph Cloud: Deploy at scale, monitor carefully, iterate boldly

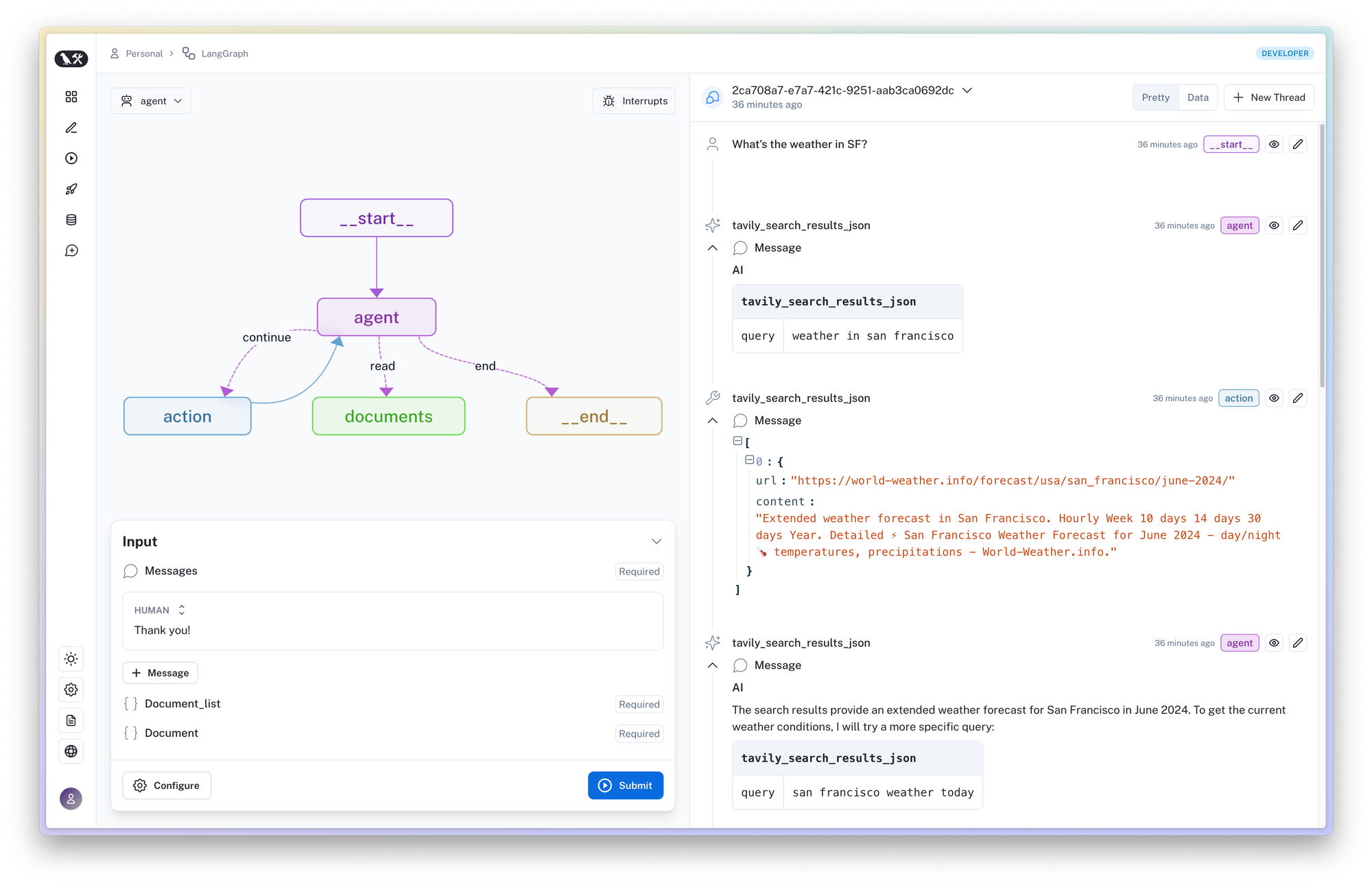

LangGraph Cloud is now in closed beta, offering scalable, fault-tolerant deployment for LangGraph agents. Deploy with one click and get an integrated tracing and monitoring experience in LangSmith, too. LangGraph Cloud also includes a new playground-like studio for debugging agent failure modes and iterating quickly.

This builds on our latest stable release, LangGraph v0.1, which gives you control over how you build agents, with support for human-in-the-loop collaboration (e.g. humans can approve or edit agent actions) and first-class streaming.
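To make the human-in-the-loop idea concrete, here is a minimal plain-Python sketch of an approval gate: before a proposed tool call runs, a reviewer can approve it, edit its arguments, or reject it. All names here (`ProposedAction`, `Review`, `run_with_approval`, the `send_email` tool) are hypothetical; this is not LangGraph's API, which handles pausing and resuming the agent for you.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class ProposedAction:
    """A tool call the agent wants to make (hypothetical structure)."""
    tool: str
    args: dict


@dataclass
class Review:
    """A human decision: approve as-is, approve with edited args, or reject."""
    approved: bool
    edited_args: Optional[dict] = None


def run_with_approval(
    action: ProposedAction,
    review: Callable[[ProposedAction], Review],
    tools: dict[str, Callable[..., str]],
) -> str:
    """Ask a reviewer before executing the action; run it only if approved."""
    decision = review(action)
    if not decision.approved:
        return f"Action '{action.tool}' rejected by reviewer."
    # Prefer the reviewer's edited arguments when they supplied any.
    args = decision.edited_args if decision.edited_args is not None else action.args
    return tools[action.tool](**args)


# Usage: a hypothetical email tool and a reviewer who redirects the recipient.
tools = {"send_email": lambda to, body: f"sent to {to}: {body}"}
action = ProposedAction("send_email", {"to": "ceo@example.com", "body": "Quarterly update"})


def redirect_to_team(a: ProposedAction) -> Review:
    return Review(approved=True, edited_args={**a.args, "to": "team@example.com"})


print(run_with_approval(action, redirect_to_team, tools))  # sent to team@example.com: Quarterly update
```

The key design point is that the side effect (sending the email) happens only after the human decision, so an edit or rejection never races with execution.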

Join the waitlist today for LangGraph Cloud. And to learn more, read our blog post announcement.

🔁 Self-improving LLM evaluators in LangSmith

Using an “LLM-as-a-Judge” is a popular way to grade outputs from LLM applications. This involves passing the generated output to a separate LLM and asking it to judge the output. But making sure the LLM-as-a-Judge itself performs well requires another round of prompt engineering. Who is evaluating the evaluators? 😵💫

LangSmith addresses this by letting users correct the LLM evaluator’s feedback. These corrections are stored as few-shot examples that are used to align and improve the LLM-as-a-Judge, so future evaluations become more accurate without manual prompt tweaking. Learn more in our blog.
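A rough sketch of the idea in plain Python: keep the human-corrected judgments and prepend the most recent ones as few-shot examples to the judge prompt. The record shape, prompt wording, and function names are assumptions for illustration, not how LangSmith stores or formats corrections.

```python
from dataclasses import dataclass


@dataclass
class Correction:
    """One human correction of an LLM judge's score (hypothetical record)."""
    question: str
    answer: str
    llm_score: int        # score the LLM judge originally gave
    corrected_score: int  # score the human says is right
    reasoning: str        # the human's explanation


def build_judge_prompt(corrections: list[Correction], question: str, answer: str, k: int = 3) -> str:
    """Build a grading prompt that includes up to k recent corrections as few-shot examples."""
    # Only corrections where the human disagreed with the judge carry new signal.
    disagreements = [c for c in corrections if c.llm_score != c.corrected_score]
    examples = disagreements[-k:] if k > 0 else []
    lines = ["Grade the answer from 1 (poor) to 5 (excellent).", ""]
    for c in examples:
        lines += [
            f"Question: {c.question}",
            f"Answer: {c.answer}",
            f"Reasoning: {c.reasoning}",
            f"Score: {c.corrected_score}",
            "",
        ]
    lines += [f"Question: {question}", f"Answer: {answer}", "Score:"]
    return "\n".join(lines)


corrections = [
    Correction("What is 2+2?", "4", 3, 5, "Correct and concise."),
    Correction("Capital of France?", "Paris", 5, 5, "Judge was already right."),
]
print(build_judge_prompt(corrections, "What is 3+3?", "6"))
```

Filtering to disagreements is one reasonable choice: examples where the judge was already right add prompt length without teaching it anything new.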

More in LangSmith

🥷 PII Masking in LangSmith

Create anonymizers by specifying a list of regular expressions or providing transformation methods for extracted string values. Read the docs.
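The regex-based approach can be illustrated with a short, library-free sketch: apply each pattern/replacement rule to every string value in a (possibly nested) trace payload. The rules and function names below are hypothetical; for the actual LangSmith anonymizer API, see the docs.

```python
import re
from typing import Any

# Hypothetical masking rules: (compiled pattern, replacement) pairs.
RULES = [
    (re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+"), "<email>"),  # email addresses
    (re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), "<ssn>"),        # US SSN-style numbers
]


def mask_string(text: str) -> str:
    """Apply every rule to a single string."""
    for pattern, replacement in RULES:
        text = pattern.sub(replacement, text)
    return text


def anonymize(value: Any) -> Any:
    """Recursively mask string values inside nested dicts and lists; leave other types alone."""
    if isinstance(value, str):
        return mask_string(value)
    if isinstance(value, dict):
        return {key: anonymize(item) for key, item in value.items()}
    if isinstance(value, list):
        return [anonymize(item) for item in value]
    return value


run_inputs = {"question": "Email jane.doe@example.com, SSN 123-45-6789", "retries": 3, "cc": ["bob@x.org"]}
print(anonymize(run_inputs))
```

Masking every string value, rather than specific keys, means PII is caught wherever it appears in a payload, at the cost of occasionally masking harmless text that happens to match a pattern.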

🛝 Custom models in LangSmith Playground

Once you’ve deployed a model server, use it in the LangSmith Playground by selecting the ChatCustomModel provider for chat-style models or the CustomModel provider for instruct-style models. See the docs.

🧷 Store the model and configuration when saving a prompt

When you save a prompt in LangSmith, the model and its configuration are now stored with it. You no longer need to reset your Playground settings each time you open the prompt, which makes testing prompts easier.

LangChain

🪄 Initialize any model in one line of code

With a universal model initializer in LangChain Python, you can use any common chat model without having to remember different import paths and class names. See the how-to guide.
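The real entry point is `init_chat_model` in the `langchain` package (see the how-to guide for its exact signature and supported providers). To show the idea without needing provider packages or API keys, here is a toy with hypothetical names: one function that resolves a provider, from an explicit argument or a model-name prefix, and bundles the keyword arguments. It returns a plain record rather than a real model, and its prefix table is an assumption, not LangChain's actual rules.

```python
from dataclasses import dataclass, field
from typing import Optional

# Illustrative prefix -> provider table (an assumption for this toy).
PREFIX_TO_PROVIDER = {
    "gpt-": "openai",
    "claude": "anthropic",
    "mistral": "mistralai",
    "command": "cohere",
}


@dataclass
class ModelSpec:
    """Stands in for a real chat model object in this toy."""
    provider: str
    model: str
    params: dict = field(default_factory=dict)


def toy_init_chat_model(model: str, model_provider: Optional[str] = None, **params) -> ModelSpec:
    """Resolve a provider from an explicit argument or the model-name prefix."""
    if model_provider is None:
        for prefix, provider in PREFIX_TO_PROVIDER.items():
            if model.startswith(prefix):
                model_provider = provider
                break
        else:
            raise ValueError(f"Cannot infer provider for {model!r}; pass model_provider explicitly.")
    return ModelSpec(provider=model_provider, model=model, params=params)


# One call shape regardless of provider:
print(toy_init_chat_model("claude-3-5-sonnet-20240620", temperature=0))
```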

✂️ Trim messages with transformer utils

Trim, merge, and filter messages easily with new message transformer utils, including trim_messages. These are especially useful for stateful or complex applications (e.g. those built in LangGraph). See the how-to guide.
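A minimal sketch of what trimming means in practice, written without LangChain: keep the most recent messages that fit a token budget, optionally preserving a leading system message. Messages are plain dicts and tokens are approximated by word counts; both are assumptions for illustration. The real trim_messages util takes its own token counter and strategy options, so check the how-to guide for its actual signature.

```python
from typing import Callable

Message = dict  # {"role": ..., "content": ...}


def word_count(msg: Message) -> int:
    """Crude stand-in for a real tokenizer: count whitespace-separated words."""
    return len(msg["content"].split())


def trim_last(
    messages: list[Message],
    max_tokens: int,
    counter: Callable[[Message], int] = word_count,
    keep_system: bool = True,
) -> list[Message]:
    """Keep the newest messages that fit in max_tokens, optionally keeping a leading system message."""
    has_system = keep_system and bool(messages) and messages[0]["role"] == "system"
    system = [messages[0]] if has_system else []
    budget = max_tokens - sum(counter(m) for m in system)
    kept: list[Message] = []
    # Walk backward from the newest message and stop at the first one that doesn't fit.
    for msg in reversed(messages[len(system):]):
        cost = counter(msg)
        if cost > budget:
            break
        kept.append(msg)
        budget -= cost
    return system + list(reversed(kept))


history = [
    {"role": "system", "content": "You are terse."},
    {"role": "user", "content": "hi there"},
    {"role": "assistant", "content": "hello"},
    {"role": "user", "content": "what is LangGraph exactly"},
]
print([m["content"] for m in trim_last(history, max_tokens=8)])
```

Walking backward keeps the latest context; note that the trimmed history can start on an assistant turn, which some chat models reject, so a real app may need an extra rule for where the kept history begins.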

Upcoming Events

Meet up with LangChain enthusiasts, employees, and eager AI app builders at the following IRL event(s) this coming month:

🐻 July 10 (Austin, TX): Austin LangChain Users Group meetup. Want to get a LangChain 101 quickstart, hear practical tips on LangChain, or see cool projects from our latest NVIDIA competition? Head to our mixer in Austin to learn and meet other AI enthusiasts. Sign up here.

Collaboration & Integrations

We 💚 helping users leverage partner features in the ecosystem by using our integrations

Anthropic Claude 3.5 Sonnet integration

Claude 3.5 Sonnet is a better, faster, and cheaper model from Anthropic. Try it out with LangChain (Python docs, JavaScript docs).

Fine-tuned tool calling with Firefunction-v2 by Fireworks

Firefunction-v2 is a new open-weights model built on Llama 3 70B and fine-tuned for function calling. Use it for tool calling, to build an agent, and to run benchmarks easily. Check out the video and cookbook.
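Tool calling ends with your code executing what the model asked for. Here is a minimal dispatcher, assuming an OpenAI-style tool call with an `id`, a `name`, and `arguments` as a JSON string (an assumption; the exact shape depends on the API or integration you use). `get_weather` is a hypothetical tool.

```python
import json
from typing import Any, Callable


def get_weather(city: str) -> str:
    """Hypothetical tool; a real one would call a weather API."""
    return f"Sunny in {city}"


# Registry of tools the model is allowed to call, keyed by name.
TOOLS: dict[str, Callable[..., Any]] = {"get_weather": get_weather}


def dispatch(tool_call: dict) -> dict:
    """Run one tool call and package the result as a tool message for the model."""
    fn = TOOLS.get(tool_call["name"])
    if fn is None:
        # Report unknown tools back to the model instead of crashing the agent loop.
        return {"tool_call_id": tool_call["id"], "content": f"Unknown tool: {tool_call['name']}"}
    args = json.loads(tool_call["arguments"])
    return {"tool_call_id": tool_call["id"], "content": str(fn(**args))}


call = {"id": "call_1", "name": "get_weather", "arguments": '{"city": "Austin"}'}
print(dispatch(call))
```

Returning the result keyed by `tool_call_id` lets the model match each result to the call it made when several tools run in one turn.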

Fully-local function calling with llama.cpp

Our llama.cpp integration now supports tool calling and structured outputs. Learn how to use it with a fine-tuned 8B-parameter Llama 3 model in this video.
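Small local models do not always emit output that matches the requested schema, so it pays to validate it. Below is a minimal standard-library sketch that parses a JSON string into a dataclass and rejects missing or mistyped fields; the `Person` schema is hypothetical. In LangChain, `with_structured_output` on supported chat models covers this, and a library such as Pydantic would validate more thoroughly.

```python
import json
from dataclasses import dataclass, fields


@dataclass
class Person:
    """Hypothetical target schema for the model's output."""
    name: str
    age: int


def parse_person(raw: str) -> Person:
    """Parse model output as JSON and check each field's presence and type."""
    data = json.loads(raw)
    values = {}
    for f in fields(Person):
        if f.name not in data:
            raise ValueError(f"missing field: {f.name}")
        # f.type is the annotated class (str or int) because annotations are not postponed here.
        if not isinstance(data[f.name], f.type):
            raise ValueError(f"field {f.name!r} should be {f.type.__name__}")
        values[f.name] = data[f.name]
    return Person(**values)


print(parse_person('{"name": "Ada", "age": 36}'))  # Person(name='Ada', age=36)
```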

Vocode integration with LangChain

You can now create custom agents for building voice-based LLM apps with LangChain and Vocode. Read the docs.

Thanks to Redpoint for naming LangChain to its 2024 InfraRed 100!

Speak the Lang

Real-life use cases and examples of how folks have used LangChain, LangSmith, or LangGraph to build high-quality, accurate LLM apps, even in production

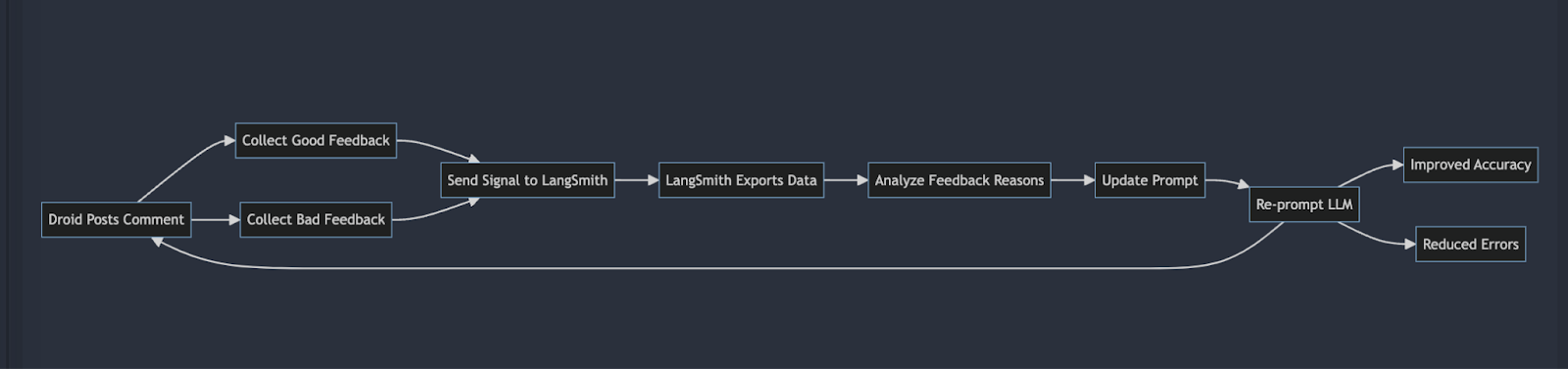

Optimizing & Testing LLMs

Read our case study on how Factory doubled its iteration speed with LangSmith. Eno Reyes (CTO of Factory) explains how they’ve accelerated software development by leveraging LangSmith’s in-depth observability. Learn how they automated the feedback loop for their Droids, hitting state-of-the-art performance benchmarks while maintaining enterprise-level security.

In addition to gaining reliable feedback, knowing how to run standardized evals on your AI workflow is crucial. Adam Lucek (Tech Innovation Specialist @ Cisco) goes over this in his LLM & AI Benchmarks video and code.

Agents

Productionizing LLM-powered automated agents is challenging. As tool-calling LLMs and agent orchestration tools improve, developers need systematic ways to evaluate agent performance. In this 3-part video series by Lance Martin, learn how to assess an agent’s end-to-end performance (video 1), a single step of an agent (video 2), and the agent’s trajectory of steps (video 3).
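The three levels of agent evaluation can be sketched as small scoring functions. This is a minimal illustration, not the method from the videos or LangSmith's evaluators: it assumes a trajectory is recorded simply as the ordered list of tool names the agent called (real traces also carry arguments and outputs), and the tool names are hypothetical.

```python
def final_response_correct(response: str, reference: str) -> bool:
    """End-to-end: does the final answer match a reference (normalized exact match for simplicity)?"""
    return response.strip().lower() == reference.strip().lower()


def single_step_correct(trajectory: list[str], step: int, expected_tool: str) -> bool:
    """Single step: did the agent pick the expected tool at a given step?"""
    return step < len(trajectory) and trajectory[step] == expected_tool


def trajectory_exact(trajectory: list[str], expected: list[str]) -> bool:
    """Trajectory: exactly the expected sequence of tool calls."""
    return trajectory == expected


def trajectory_in_order(trajectory: list[str], expected: list[str]) -> bool:
    """Trajectory: expected calls appear in order, extra calls allowed in between."""
    remaining = iter(trajectory)
    # `step in remaining` advances the iterator past each match, so order is enforced.
    return all(step in remaining for step in expected)


trajectory = ["search_docs", "check_account", "search_docs", "send_reply"]
print(trajectory_in_order(trajectory, ["check_account", "send_reply"]))  # True
print(trajectory_exact(trajectory, ["check_account", "send_reply"]))     # False
```

The exact-match check is strict and easy to interpret, while the in-order check tolerates harmless extra steps; which one fits depends on how much freedom the agent is meant to have.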

You can also reference the conceptual guide and tutorial on agent evaluations.

Our CEO, Harrison, also spoke about agents on the latest episode of “Training Data” from Sequoia Capital. What is an agent? What are the past, present, and future of agents in the LLM space? These questions (and more) are discussed in the episode; give it a listen on Spotify or Apple Podcasts.

And for even more examples of agents in action:

- Complete customer verification flow with LangGraph & Mistral AI video and code from Eden Marco (LLM Specialist @ Google Cloud)

- Building and deploying a MinIO-powered LangChain agent API blog by David Cannan (DevOps @ MinIO)

- LangGraph 101: Building agents by James Briggs (Founder @ Aurelio AI)

- Multi-agent workflow with LangGraph and LangChain by Moritz Glauner (Head of Data Science) and Lyon Schulz (Data Scientist) @ Bertelsmann Data Services

New to LangChain? Here are some suggestions

Read this LangChain primer from Lakshya Agarwal (Analytics @ McGill) or watch the LangChain Masterclass for Beginners from Brandon Hancock (Full Stack Engineer @ Saltbox). His video walks through 20+ real code examples of how to use LangChain to build powerful AI applications, from integrating chat models to creating RAG chatbots and automating workflows with chains.

How can you follow along with the Lang Latest? Check out the LangChain blog and YouTube channel for even more product and content updates. For any additional questions, email us at support@langchain.dev.

![[Week of 6/24] LangChain Release Notes](/content/images/size/w760/format/webp/2024/06/6-24-Release-Notes---Ghost.png)