TL;DR:

- We’re excited to announce a new syntax to create chains with composition. This comes along with a new interface that supports batch, async, and streaming out of the box. We’re calling this syntax LangChain Expression Language (LCEL).

- We've created a "LangChain Teacher" to help teach you LCEL (assumes LangChain familiarity)

- We'll be doing a webinar on 8/2 about this and how to use it

- LCEL is aimed at making it easier to construct complex chains, and it pairs nicely with LangSmith, the platform we recently released to make it easier to go from prototype to production.

The idea of chaining has proven popular when building applications with language models. Chaining can take a few different forms, each with its own benefits. Some examples:

Making Multiple LLM Calls

Chaining can mean making multiple LLM calls in a sequence. Language models are often nondeterministic and can make errors, so making multiple calls to check previous outputs or to break down larger tasks into bite-sized steps can improve results.
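As a rough illustration of this first form, the sketch below chains two calls: one drafts an answer and a second checks that draft. `fake_llm` is a hypothetical stand-in for a real model call (it is not a LangChain API), so the snippet runs offline.

```python
from typing import Callable


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a model call; returns canned text."""
    if prompt.startswith("Check"):
        # Pretend the model verifies the draft it was shown.
        return "OK" if prompt.endswith("2 + 2 = 4") else "WRONG"
    return "2 + 2 = 4"


def draft_then_check(question: str, llm: Callable[[str], str]) -> dict:
    # Call 1: produce a draft answer.
    draft = llm(f"Answer: {question}")
    # Call 2: feed the draft back in and ask for a verdict.
    verdict = llm(f"Check this answer to '{question}': {draft}")
    return {"draft": draft, "verdict": verdict}


result = draft_then_check("What is 2 + 2?", fake_llm)
print(result)
```

In a real application the second call would go to an actual model, and a negative verdict might trigger a retry.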

Constructing the Input to LLMs

Chaining can mean combining data transformation with a call to an LLM, for example formatting a prompt template with user input, or using retrieval to look up additional information to insert into the prompt template. This is necessary because you often need data from multiple sources to perform a task, and that data may have to be fetched at runtime depending on the input.
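A minimal sketch of this second form, assuming a tiny in-memory lookup in place of a real retriever. The names `DOCS`, `TEMPLATE`, `retrieve`, and `build_prompt` are hypothetical and exist only for illustration.

```python
# Hypothetical in-memory "document store"; a real app would query a retriever.
DOCS = {
    "bears": "Bears are large mammals that hibernate in winter.",
    "cats": "Cats are small carnivores often kept as pets.",
}

TEMPLATE = "Context: {context}\n\nQuestion: {question}"


def retrieve(question: str) -> str:
    """Return the first document whose key appears in the question."""
    for key, text in DOCS.items():
        if key in question.lower():
            return text
    return "No relevant context found."


def build_prompt(question: str) -> str:
    # The context is looked up at runtime, conditional on the input.
    return TEMPLATE.format(context=retrieve(question), question=question)


prompt_text = build_prompt("Do bears sleep through winter?")
print(prompt_text)
```

The resulting string is what would be sent to the model; the retrieval step and the formatting step are two links of the same chain.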

Using the Output of LLMs

Another form of chaining passes the output of an LLM call to a downstream application. For example, you might use the LLM to generate Python code and then run that code, or use it to generate SQL and then execute that against a SQL database.
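The SQL case might look roughly like the sketch below, which uses Python's built-in `sqlite3` module. `fake_llm_sql` is a hypothetical stand-in for a model that writes SQL, and the `users` table is made up for the example.

```python
import sqlite3


def fake_llm_sql(question: str) -> str:
    """Hypothetical stand-in for a model that turns a question into SQL."""
    return "SELECT COUNT(*) FROM users WHERE age >= 18"


# Set up a throwaway in-memory database with illustrative data.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, age INTEGER)")
conn.executemany(
    "INSERT INTO users VALUES (?, ?)",
    [("Ann", 34), ("Bo", 15), ("Cy", 52)],
)

sql = fake_llm_sql("How many adult users are there?")
# Generated SQL should not be trusted blindly; only allow read-only queries here.
if not sql.lstrip().upper().startswith("SELECT"):
    raise ValueError("refusing to run a non-SELECT statement")

adult_count = conn.execute(sql).fetchone()[0]
print(adult_count)
conn.close()
```

The guard before execution is a deliberately simple design choice; a production setup would more likely rely on a read-only database user or stricter validation.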

There’s also something about working with language models that makes the idea of chaining appealing. Sure, all the above operations could be done with code, but people have gravitated towards the idea of chaining - as evidenced by the multitude of low-code/no-code platforms for building language model applications (some, like Flowise and LangFlow, built on top of LangChain). Why? It’s become a bit of a meme, but if text is the universal interface, and all of these operations involve manipulating text, then the setup lends itself incredibly naturally to an expression language.

LangChain was born from the idea of making these types of operations easy. We saw people doing common patterns and factored them out into pre-built chains: LLMChain, ConversationalRetrievalChain, SQLQueryChain.

But these chains weren’t really composable. Sure - we had SequentialChain, but that wasn’t amazingly usable. And under the hood the other chains involved a lot of custom code, which made it tough to enforce a common interface for all chains, and ensure that all had equal levels of batch, streaming, and async support.

Today we’re excited to announce a new way of constructing chains. We’re calling this the LangChain Expression Language (in the same spirit as the SQLAlchemy Expression Language). This is a declarative way to truly compose chains - and get streaming, batch, and async support out of the box. You can use all the same existing LangChain constructs to create them.

We’ve included guides on how to work with the interface as well as some examples of using it. Let’s take a look at one of the more common ways below:

    from langchain.chat_models import ChatOpenAI
    from langchain.prompts import ChatPromptTemplate

    model = ChatOpenAI()
    prompt = ChatPromptTemplate.from_template("tell me a joke about {foo}")
    chain = prompt | model

    chain.invoke({"foo": "bears"})
    >>> AIMessage(content="Why don't bears ever wear shoes?\n\nBecause they have bear feet!", additional_kwargs={}, example=False)

This uses a standard ChatOpenAI model and prompt template. You chain them together with the | operator, and then call it with chain.invoke. We can also get async, batch, and streaming support out of the box.
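One way to picture what the `|` operator is doing is Python's `__or__` operator overloading. The sketch below is a toy under that assumption: the `Step` class and its methods are hypothetical and far simpler than LangChain's actual interface, and the "model" is a plain function rather than an LLM.

```python
import asyncio
from typing import Any, Callable, Iterator, List


class Step:
    """Toy composable unit. An illustration only, not LangChain's implementation."""

    def __init__(self, func: Callable[[Any], Any]):
        self.func = func

    def __or__(self, other: "Step") -> "Step":
        # `a | b` builds a new Step that feeds a's output into b.
        return Step(lambda value: other.invoke(self.invoke(value)))

    def invoke(self, value: Any) -> Any:
        return self.func(value)

    def batch(self, values: List[Any]) -> List[Any]:
        # Naive default: one call per input, results in input order.
        return [self.invoke(v) for v in values]

    def stream(self, value: Any) -> Iterator[str]:
        # Naive default: compute the full output, then yield it word by word.
        for word in str(self.invoke(value)).split():
            yield word

    async def ainvoke(self, value: Any) -> Any:
        # Run the synchronous call in a worker thread so the event loop stays free.
        loop = asyncio.get_running_loop()
        return await loop.run_in_executor(None, self.invoke, value)


toy_prompt = Step(lambda d: f"tell me a joke about {d['foo']}")
toy_model = Step(lambda text: f"[model reply to: {text}]")  # stand-in for an LLM
toy_chain = toy_prompt | toy_model

single = toy_chain.invoke({"foo": "bears"})
many = toy_chain.batch([{"foo": "bears"}, {"foo": "cats"}])
chunks = list(toy_chain.stream({"foo": "bears"}))
async_result = asyncio.run(toy_chain.ainvoke({"foo": "bears"}))
print(single)
```

Because every composed object exposes the same four methods, whatever you build with `|` gets them as well, which is the property the standard interface is after.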

Batch

batch takes a list of inputs. Where optimizations are possible internally (like literally batching calls to LLM providers), they are applied.

    chain.batch([{"foo": "bears"}, {"foo": "cats"}])
    >>> [AIMessage(content="Why don't bears ever wear shoes?\n\nBecause they have bear feet!", additional_kwargs={}, example=False),
     AIMessage(content="Why don't cats play poker in the wild?\n\nToo many cheetahs!", additional_kwargs={}, example=False)]

Stream

stream returns an iterable that you can consume.

    for s in chain.stream({"foo": "bears"}):
        print(s.content, end="")

Async

All of invoke, batch, and stream expose async methods. We only show ainvoke here for simplicity, although you can check out our notebook that deep dives into the interface to see more.

    await chain.ainvoke({"foo": "bears"})

In our cookbook we’ve included examples of doing this with:

We’ll be constantly beefing up support for this and adding more examples of functionality, so let us know what you’d like to see. We'll also be incorporating this more into LangChain: create_sql_query_chain already uses it under the hood.

Besides the benefit of adding standard interfaces, another benefit is that this will make it easier for users to customize parts of the chain. Since the chain is expressed in such a declarative and composable way, it will be much clearer how to swap certain components out. It also brings the prompts front and center, making it clearer how to modify them. The prompts in LangChain are just defaults, and are largely intended to be modified for your particular use case if you are serious about taking an application into production. Previously, the prompts were a bit hidden and hard to change. With LCEL, they are more prominent and easily swappable.

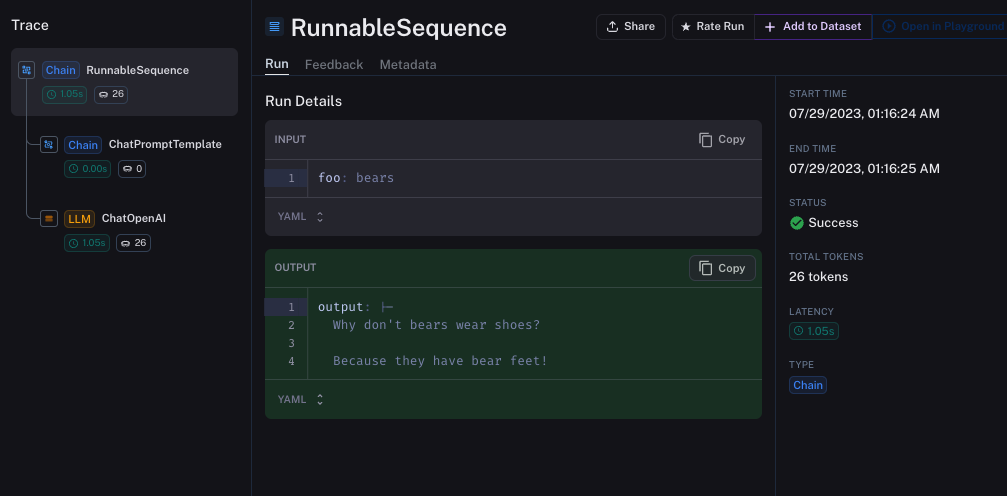

LangChain Expression Language creates chains that integrate seamlessly with LangSmith. Here is a trace for the above:

You can inspect the trace here. Previously, creating a custom chain took a good bit of work to make sure callbacks were passed through correctly so that the chain could be traced. With LangChain Expression Language that happens automatically.
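To see why having the composition layer own callback propagation helps, consider the toy pipeline below. It is a sketch under stated assumptions, not how LangChain or LangSmith implement tracing: `TracedSequence` and the callback signature are hypothetical.

```python
from typing import Any, Callable, List, Sequence, Tuple

# Hypothetical callback signature: (step name, step input, step output).
Callback = Callable[[str, Any, Any], None]


class TracedSequence:
    """Toy pipeline that reports every step to the same callbacks."""

    def __init__(self, steps: List[Tuple[str, Callable[[Any], Any]]]):
        self.steps = steps

    def invoke(self, value: Any, callbacks: Sequence[Callback] = ()) -> Any:
        for name, func in self.steps:
            output = func(value)
            # The sequence forwards callbacks, so step authors don't have to.
            for callback in callbacks:
                callback(name, value, output)
            value = output
        return value


trace: List[Tuple[str, Any, Any]] = []
pipeline = TracedSequence([
    ("prompt", lambda d: f"tell me a joke about {d['foo']}"),
    ("model", lambda text: text.upper()),  # stand-in for an LLM call
])
final = pipeline.invoke(
    {"foo": "bears"},
    callbacks=[lambda *event: trace.append(event)],
)
print(final)
print([name for name, _, _ in trace])
```

Each step is recorded without containing any tracing code of its own, which is the kind of bookkeeping a custom chain previously had to do by hand.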

We've also tried to make this as easy as possible for people to learn by creating a "LangChain Teacher" application that will walk you through the basics of getting started with LangChain Expression Language. You can access it here. We'll be open sourcing this soon.

We'll also be doing a webinar on this tomorrow. We'll cover the standard interface it exposes, how to use it, and why to use it. Register for that here.

We're incredibly excited about this being an easy and lightweight way to truly compose chains together. If you're excited as well, we're hiring for roles that would work directly on this. The best way to get our attention is to open a PR or two adding more functionality. There's still a lot to build :)