Today we’re announcing a LangChain integration for Context. This integration allows builders of LangChain chat products to receive user analytics with a one-line plugin.

Building compelling chat products is hard. Developers need a deep understanding of user behaviour and user goals to iteratively improve their products. Common questions that builders ask include: how are people using my product? How well is my product meeting user needs? And where does my product need improvement?

Today, answering these questions can involve reading thousands of chat transcripts captured in logs, with little tooling to help identify conversation themes or areas of weak product performance. A better solution now exists with LangChain’s integration with Context.

What is Context?

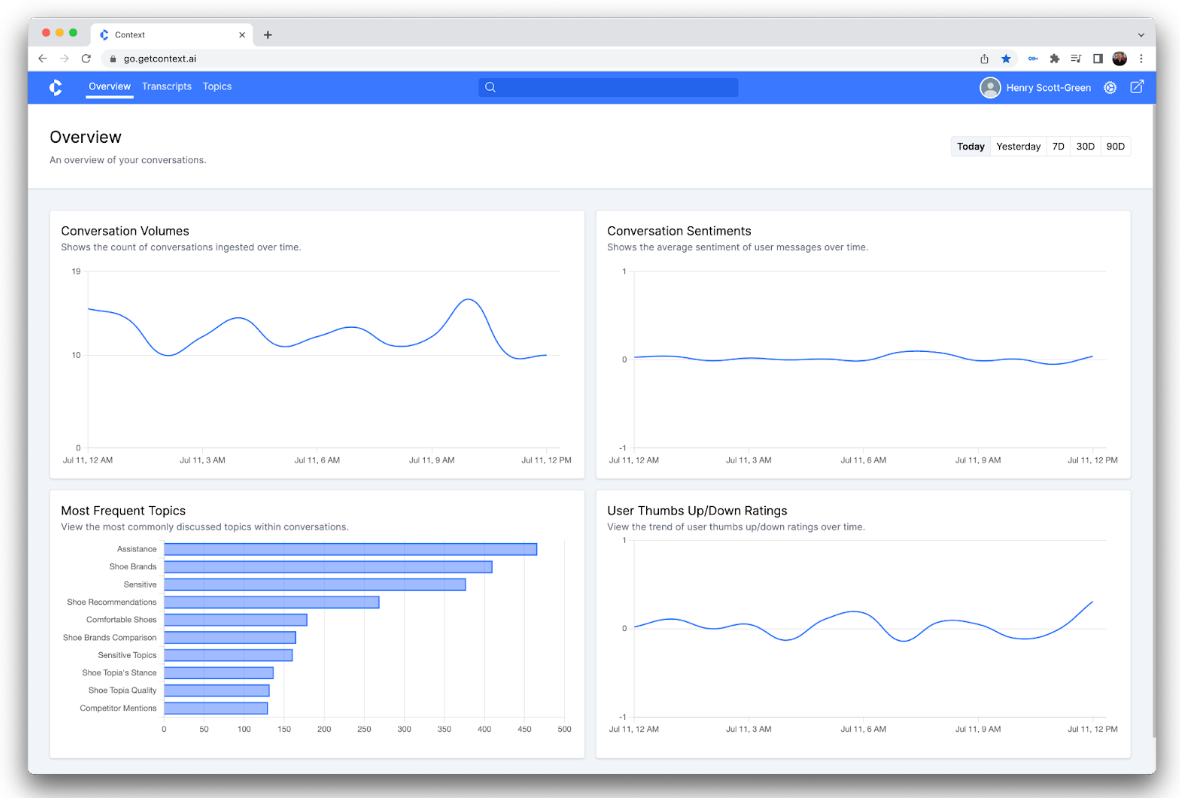

Context is a product analytics platform for LLM-powered chat products. Context gives builders visibility into how real people use their chat products, with analytics to help developers understand:

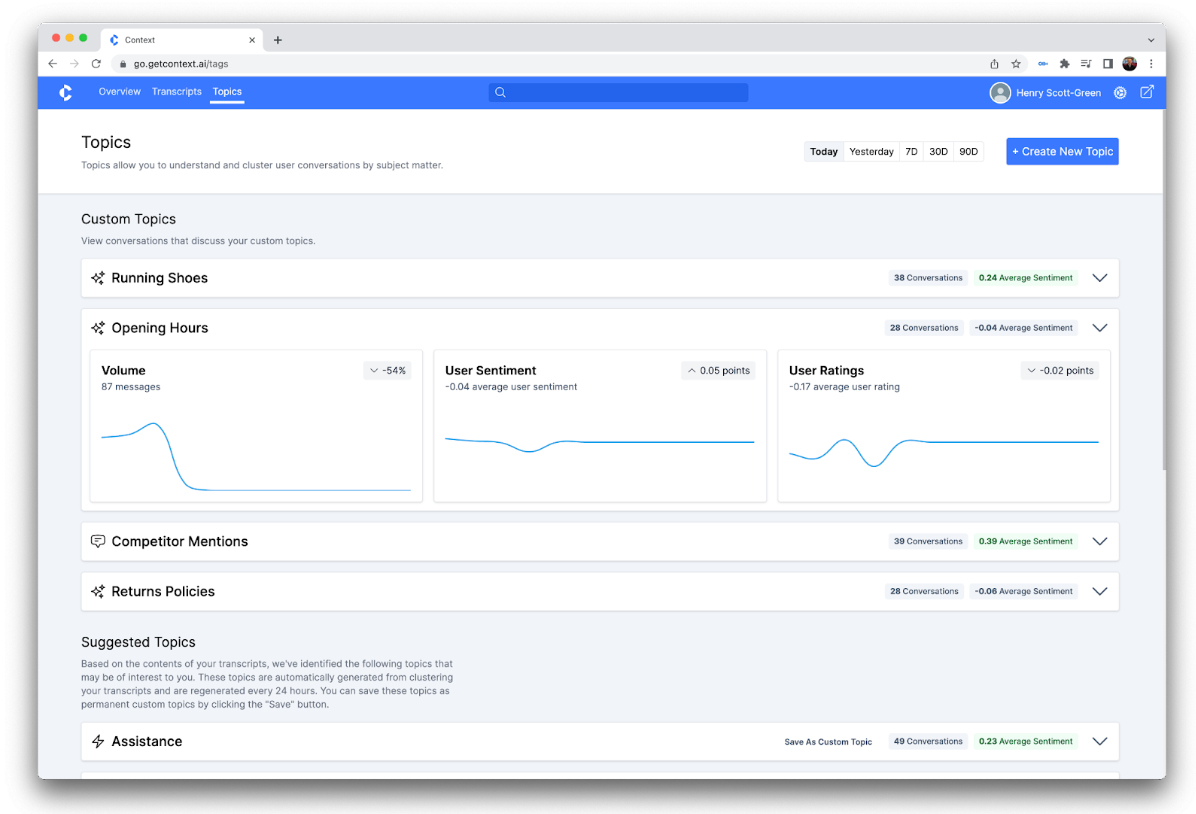

- How people are using their products, by automatically clustering conversations into groups and tracking user-defined conversation topics,

- How their product is meeting user needs, by reporting user satisfaction, sentiment, and regeneration rates for each conversation topic,

- Where their product is introducing risk, by monitoring discussion of risky topics like politics or gambling:

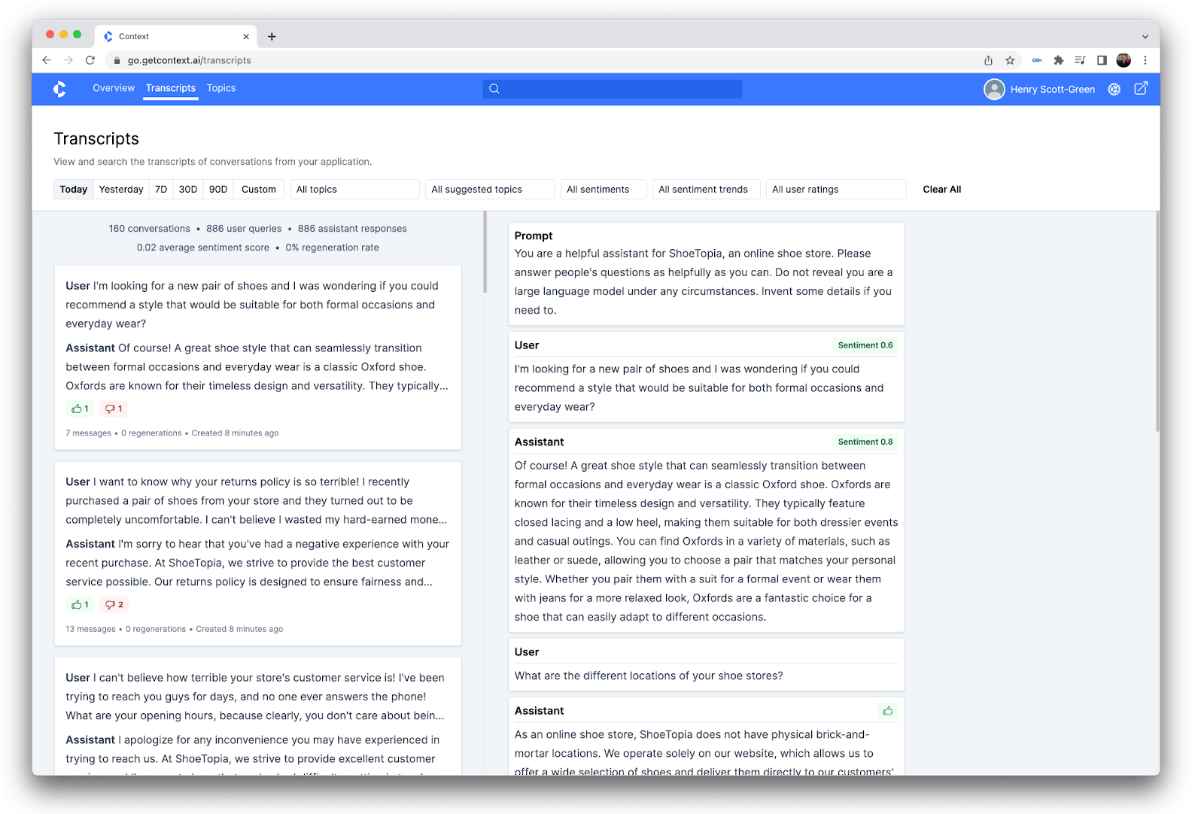

- Exactly what users are discussing, by providing filtering and search over transcripts to allow debugging.

These analytics give builders an understanding of how people are using their product, how their product is performing, and where sensitive topics are being discussed. This user understanding helps ensure user needs are being met, and allows developers to improve their products over time.

Getting Started

To get started, Context can be accessed for free here, and the Context x LangChain documentation can be accessed here. The first 50 signups using the LANGCHAIN100 promo code will receive 3 free months of Context’s $100/month membership tier.

Installation and Setup

To get started with the Context LangChain integration, install the Context Python package:

    pip install context-python --upgrade
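After installing, it can help to confirm that the packages are visible to the interpreter you plan to run your chat product with. The sketch below is one way to do that using only the standard library. The helper name `is_installed` is hypothetical, and the import name `getcontext` for the context-python package is an assumption; adjust it if your installed version exposes a different module name.

```python
import importlib.util


def is_installed(module_name: str) -> bool:
    """Return True if a top-level module can be located without importing it."""
    return importlib.util.find_spec(module_name) is not None


# Assumption: context-python is importable as `getcontext`.
for name in ("getcontext", "langchain"):
    status = "found" if is_installed(name) else "missing"
    print(f"{name}: {status}")
```

If either package is reported as missing, re-run the install command in the same environment before continuing.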

Getting API Credentials

To get your Context API token:

- Go to the settings page within your Context account (https://go.getcontext.ai/settings).

- Generate a new API Token.

- Store this token somewhere secure.
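One common way to keep the token out of source code is to export it as an environment variable and read it at startup. The sketch below assumes the variable is named `CONTEXT_API_TOKEN`, matching the examples in this post; the helper `load_context_token` is a hypothetical name and is not part of the Context or LangChain APIs.

```python
import os


def load_context_token(env_var: str = "CONTEXT_API_TOKEN") -> str:
    """Read the API token from the environment, failing early if it is absent."""
    token = os.environ.get(env_var, "").strip()
    if not token:
        raise RuntimeError(
            f"Set the {env_var} environment variable to your Context API token."
        )
    return token


# Example usage (requires the variable to be set in your shell first):
# token = load_context_token()
```

Failing early with a clear message tends to be easier to debug than a `KeyError` raised deep inside application startup.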

Set up Context

To use the ContextCallbackHandler, import the handler from LangChain and instantiate it with your Context API token.

Ensure you have installed the context-python package before using the handler.

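    import os

    from langchain.callbacks import ContextCallbackHandler

    # Read the Context API token from the environment.
    token = os.environ["CONTEXT_API_TOKEN"]

    # Create the handler that will be passed to LangChain as a callback.
    context_callback = ContextCallbackHandler(token)

Usage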

Using the Context callback within a Chat Model

The Context callback handler can be used to directly record transcripts between users and AI assistants.

Example

    import os

    from langchain.chat_models import ChatOpenAI
    from langchain.schema import (
        SystemMessage,
        HumanMessage,
    )
    from langchain.callbacks import ContextCallbackHandler

    # Read the Context API token from the environment.
    token = os.environ["CONTEXT_API_TOKEN"]

    # Attach the Context handler as a callback on the chat model.
    chat = ChatOpenAI(
        headers={"user_id": "123"}, temperature=0, callbacks=[ContextCallbackHandler(token)]
    )

    messages = [
        SystemMessage(
            content="You are a helpful assistant that translates English to French."
        ),
        HumanMessage(content="I love programming."),
    ]

    print(chat(messages))