Editor’s Note: This blog post was written by Matt Carey, one of the builders of Genoss. We're sharing it on our blog too because we hear from a lot of people who don't want to get locked into a single model. We think tools like Genoss that provide clean interfaces for interoperability will help more builders get robust apps into production fast. We're also incredibly excited about its integration with LangSmith, which enables easy debugging, logging, monitoring, and observability!

Introducing Genoss GPT: One-line GPT application portability with the Genoss model gateway.

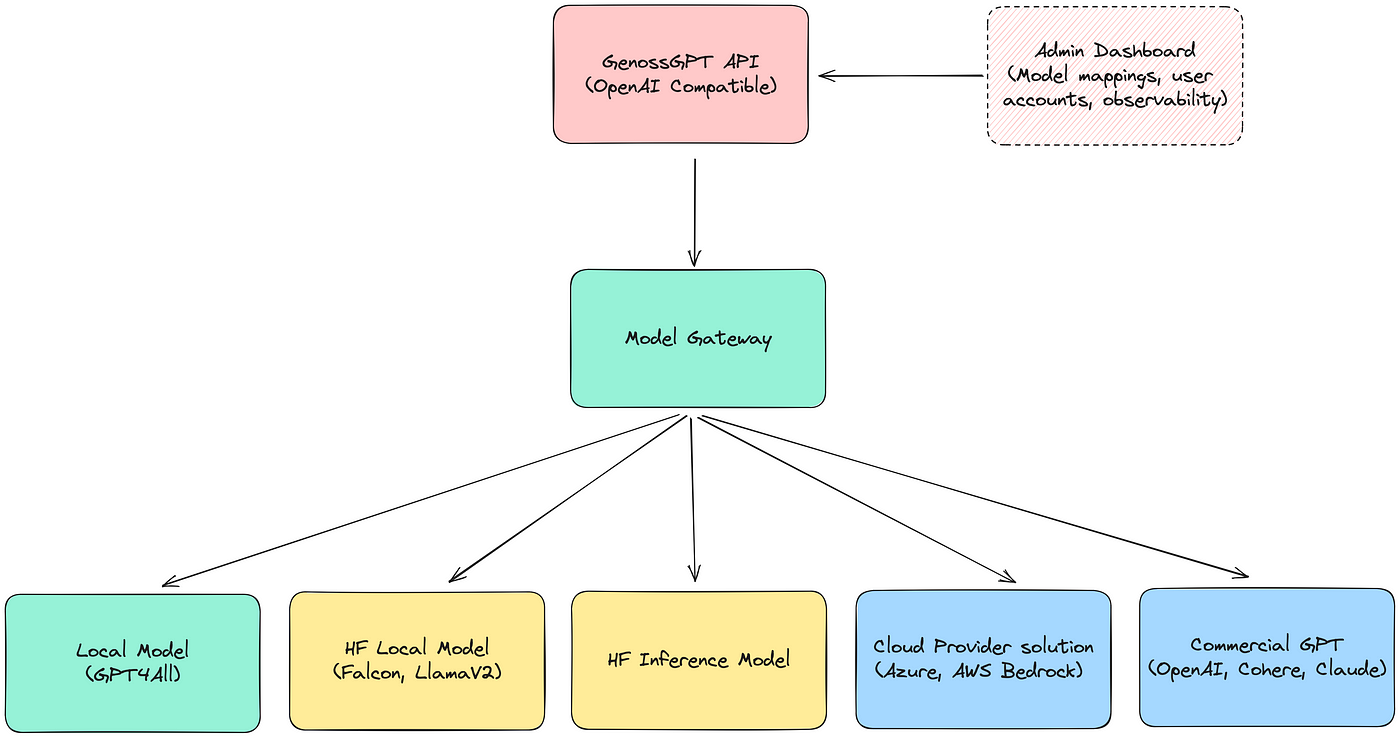

TL;DR: GenossGPT offers a universal interface for interacting with GPT models, simplifying the task of integrating and switching between models in your GenAI-powered application. It gives businesses control over their model usage, while leveraging LangSmith to provide enhanced observability and analytics in production.

Development in the generative AI space continues to pick up steam, with an ever-expanding range of uses for large language models (LLMs). These uses span diverse domains, including personal assistants, document retrieval, and image and text generation. Groundbreaking apps like ChatGPT have paved the way for companies to dive into the space and start building with this technology.

Big players are building their own models: AWS with its Titan models, Meta with its newly released Llama 2, and Microsoft through its partnerships with both OpenAI and Meta. This shows there is serious investment in the space.

Unicorn startups like Hugging Face, raising money at a $4 billion valuation, dominate the open-source space and allow anyone to deploy models with ease. Universities will continue to push the envelope of what is possible on a budget, and these innovations will feed back into open-source projects. However, because of the high costs involved, smaller companies are unlikely to be able to compete with the big tech giants in building production LLMs from scratch.

Ecosystems like LangChain provide a set of helpful utilities for developers interacting with these third-party LLMs, or foundation models as they are often called. LangChain classes help developers work with models through I/O and memory, and provide chains that accomplish specific tasks such as document retrieval. LangChain also establishes a standard vocabulary, introducing terms like ‘RetrievalChain’ and ‘MemoryStore’ with well-defined meanings in the ecosystem, which streamlines discussion among AI engineers.

The integration problem

A discernible gap still exists in the current tooling for integrating with LLM providers. As newer and better models are introduced and the technology progresses, engineers want the freedom to quickly implement new features with these models.

LangChain provides classes for a huge number of models, such as those from OpenAI and Hugging Face, all based on a common LLM class. However, each provider requires its own class. These classes have different methods and properties, and although you would hope they were plug-and-play, swapping one for another is rarely that simple.

Factors such as streaming and nested chains further complicate the problem. LangChain does a great job of building interoperability between composable pieces (e.g. VectorStores, DocumentLoaders and Retrievers), but we need to improve the developer experience when it comes to switching between LLMs.

Genoss is the solution

Genoss is a model gateway powered by LangChain. It standardises calls to any supported LLM behind one unified interface, compatible with the OpenAI API specification. By simply changing your base URL to your Genoss endpoint, you have any supported LLM at your fingertips. Any third-party tool that supports the OpenAI interface works with Genoss out of the box.
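To make the "change your base URL" idea concrete, here is a minimal sketch that builds (but does not send) an OpenAI-style chat completion request using only the Python standard library. It assumes Genoss mirrors the OpenAI paths under its base URL; `GENOSS_BASE_URL` is a hypothetical placeholder for your hosted or self-hosted endpoint, and `build_chat_request` is an illustrative helper, not part of Genoss.

```python
import json
import urllib.request

# Hypothetical Genoss endpoint: replace with your hosted or self-hosted URL.
# Assumption: Genoss serves OpenAI-compatible paths under this base URL.
GENOSS_BASE_URL = "http://localhost:8000"
OPENAI_BASE_URL = "https://api.openai.com/v1"


def build_chat_request(base_url: str, model: str, prompt: str,
                       api_key: str = "sk-placeholder") -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# Same body, same path: only the base URL differs between the two requests.
direct = build_chat_request(OPENAI_BASE_URL, "gpt-3.5-turbo", "Hello!")
via_genoss = build_chat_request(GENOSS_BASE_URL, "gpt-3.5-turbo", "Hello!")
```

Sending either request is a single `urllib.request.urlopen(...)` call. With the official `openai` Python package (v1 and later), the equivalent switch is passing `base_url` when constructing the `OpenAI` client, which is why tools built on the OpenAI interface need no other changes.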

Genoss does the heavy lifting to provide a single, cohesive interface for both Completions and Embeddings models across multiple providers. You can switch between local open-source models, OpenAI models, AWS Bedrock models or any Hugging Face model by changing only the model name.
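A minimal sketch of what "change only the model name" means at the request level, assuming Genoss accepts OpenAI-style bodies for `/chat/completions` and `/embeddings`. The model identifiers below are hypothetical placeholders; check the Genoss documentation for the exact names it accepts.

```python
import json

# Hypothetical model identifiers standing in for an OpenAI model, a local
# open-source model, an AWS Bedrock model and a Hugging Face model.
CHAT_MODELS = [
    "gpt-3.5-turbo",
    "example-local-model",
    "example-bedrock-model",
    "example-hf-model",
]


def chat_payload(model: str, prompt: str) -> dict:
    """OpenAI-style body for POST {base_url}/chat/completions."""
    return {"model": model, "messages": [{"role": "user", "content": prompt}]}


def embeddings_payload(model: str, texts: list[str]) -> dict:
    """OpenAI-style body for POST {base_url}/embeddings."""
    return {"model": model, "input": texts}


prompt = "Summarise this ticket in one sentence."
bodies = [chat_payload(m, prompt) for m in CHAT_MODELS]

# Every body has the same shape; only the "model" field changes.
for body in bodies:
    print(json.dumps(body))
```

Because the request shape never changes, the application code that produces these bodies stays the same whichever provider sits behind the gateway.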

When using Genoss in an enterprise setting, an admin can update the model with a single change on the dashboard (coming soon). There is no need to update any application code to point towards new providers; the mapping is handled entirely by Genoss. This is useful for applications that use different models for different tasks or even different user tiers. Paid users of your application could be given a choice of models with varying benefits, such as enhanced privacy or fine-tuning on specific knowledge, whereas demo users would use a cheaper, generic model.
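The tier-based mapping described above can be pictured as a lookup table owned by the gateway rather than the application. This is a hypothetical sketch of the idea, not Genoss's implementation: `ROUTES`, `resolve_model` and `update_route` are illustrative names, and the model names are placeholders.

```python
from dataclasses import dataclass


@dataclass
class Route:
    model: str
    description: str


# Hypothetical admin-controlled mapping: the application only knows the
# user's tier, and the gateway resolves it to a concrete model.
ROUTES: dict[str, Route] = {
    "paid": Route("example-private-finetuned-model", "private, fine-tuned"),
    "demo": Route("example-cheap-generic-model", "generic, low cost"),
}


def resolve_model(tier: str, routes: dict[str, Route] = ROUTES) -> str:
    """Return the model for a user tier, falling back to the demo model."""
    return routes.get(tier, routes["demo"]).model


def update_route(tier: str, model: str, routes: dict[str, Route] = ROUTES) -> None:
    """Admin-side change: swap a tier's model without touching app code."""
    old = routes.get(tier)
    routes[tier] = Route(model, old.description if old else "")


before = resolve_model("paid")
update_route("paid", "example-newer-model")  # e.g. a dashboard edit
after = resolve_model("paid")
```

The design point is that the application keeps sending the same request; only the table changes, so moving paid users to a newer model is a configuration update rather than a deployment.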

Usage in production

Genoss is open-source and free to use, either through the hosted version or self-hosted on your own infrastructure. Think of Genoss as Supabase for LLM applications: it lets developers go from a weekend side project straight to production on the same infrastructure and tech stack.

Genoss leverages LangChain’s new tool, LangSmith. LangSmith provides observability, debugging and testing for the inputs and outputs of the models at each stage of the workflow. Analysing LLM calls helps improve how effectively both the model and the prompts are used. This allows companies to run LLM-based workflows in production with more confidence in their models and their outputs than ever before.

When using Genoss as your model gateway, LangSmith is automatically set up and ready to use. Companies can also use LangSmith to track token usage, manage the costs of their LLM calls and keep an eye on latencies directly from the Genoss console.
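As an illustration of the arithmetic behind cost and latency tracking (not how LangSmith computes it), here is a minimal sketch that reads the `usage` block of an OpenAI-style response and times a call. The per-1K-token prices and `example-model` are hypothetical, and the response is stubbed rather than fetched from a real gateway.

```python
import time
from typing import Callable

# Hypothetical per-1K-token prices in USD; substitute your provider's real rates.
PRICES_PER_1K = {
    "example-model": {"prompt": 0.0015, "completion": 0.002},
}


def call_cost(model: str, usage: dict) -> float:
    """Cost of one call, computed from an OpenAI-style `usage` block."""
    price = PRICES_PER_1K[model]
    return (usage["prompt_tokens"] * price["prompt"]
            + usage["completion_tokens"] * price["completion"]) / 1000


def timed(call: Callable[[], dict]) -> tuple[dict, float]:
    """Run a call and return (response, latency in seconds)."""
    start = time.perf_counter()
    response = call()
    return response, time.perf_counter() - start


# Stubbed response standing in for a real gateway call.
fake_response = {
    "model": "example-model",
    "usage": {"prompt_tokens": 1000, "completion_tokens": 500, "total_tokens": 1500},
}
response, latency = timed(lambda: fake_response)
cost = call_cost(response["model"], response["usage"])
```

With these made-up prices, 1,000 prompt tokens and 500 completion tokens come to (1.5 + 1.0) / 1000 = 0.0025 USD; a tracing tool aggregates figures like this across every call.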

Conclusion

The lack of consistency between model interfaces makes building with LLMs unnecessarily complicated. Because cloud providers, commercial models and open-source models are not aligned on a standard interface, Genoss unifies them behind one. Genoss reduces the learning curve both for developers starting to build and for organisations scaling applications that harness the power of generative AI.

Given the rapid pace of LLM advancements, developers will use Genoss to test the latest models, experiment with fine-tuning and run private models locally. Enterprises will use Genoss to control the model usage of their users in both internal and production apps whilst utilising LangSmith for observability, debugging and testing.

Find the code repository for Genoss here. We look forward to seeing what you will build with it.

Thanks to the team, Stan Girard, Maxime Thoosen and Arnault Chazareix, for all your hard work.