We’ve been busy shipping 🚢! Next Thursday Feb 15th at noon PT, LangChain Co-founder Ankush Gola will host a webinar to share some exciting updates on LangSmith, and we promise everyone will have access to the platform by then! Sign up here to join and ask questions about LangSmith.

🦜⚒️ New in LangSmith

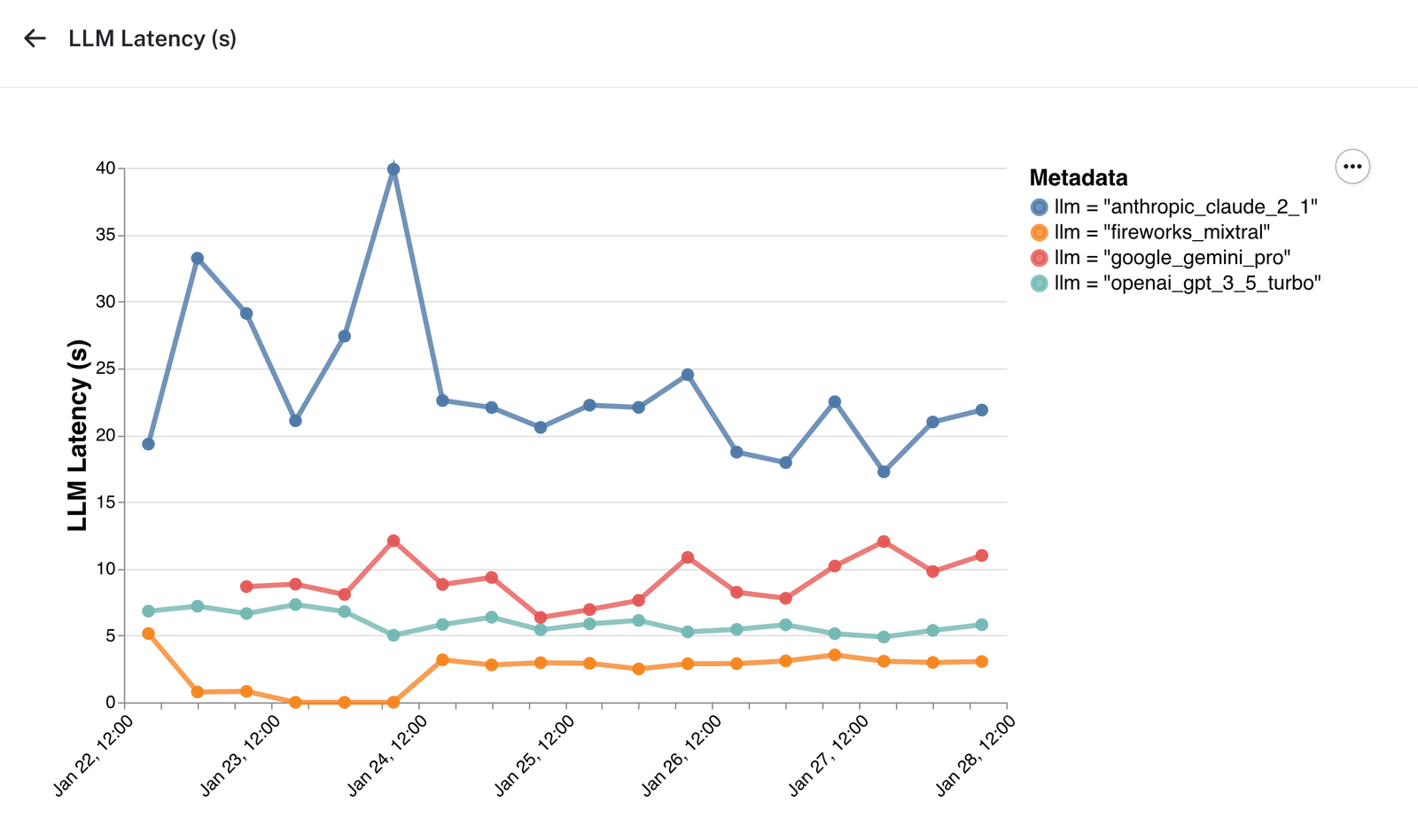

- Better Monitoring. We’re excited to launch grouped monitoring charts in LangSmith by metadata and by tag. It’s now easier than ever to see how different segments are performing or to view a specific customer’s experience in your product. Read how to set up tags and metadata, and learn about the kinds of visibility this feature unlocks on the blog.

- Cost visibility. Cost tracking is here!! We’ve tracked total tokens for a while, and now users can see how much they’ve spent on LLM calls in their traces and in the monitoring tab. This is huge for understanding the tradeoffs and ROI of your application. We’re planning to let users add custom rates too, so if you have special pricing, LangSmith can still give you an accurate view of spend.

- Regression Testing. Charts are now available in the testing view, so you can see visually how performance changes across iterations of your application. Spot regressions or improvements faster.

- Annotation Queues Feedback Rubric. We’ve added a structured feedback rubric to help make annotations easier. This feature enables users to create custom feedback keys with predefined categories. The rubric will show up across your LangSmith organization in annotation queues, and feedback will then be validated on submission.

- Trace Sampling. We’ve also updated the LangSmith SDKs, adding auto-batching for better performance as well as trace sampling. Upgrade to Python SDK version >= 0.0.84 and JS SDK version >= 0.0.64 to check it out.

- Support for HuggingFace 🤗 models in the Playground.
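
To make the cost visibility item above a bit more concrete, here is a minimal sketch of the arithmetic behind spend tracking with custom rates. It is not LangSmith's implementation: the `Rate` class, the `RATES` table, the model name, and the prices are all hypothetical placeholders chosen for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Rate:
    """Hypothetical price per 1,000 tokens, in USD."""
    prompt_per_1k: float
    completion_per_1k: float


# Placeholder rates, NOT real prices. Swap in your own negotiated pricing.
RATES: Dict[str, Rate] = {
    "example-model": Rate(prompt_per_1k=0.50, completion_per_1k=1.50),
}


def run_cost(model: str, prompt_tokens: int, completion_tokens: int,
             rates: Dict[str, Rate] = RATES) -> float:
    """Cost of a single LLM call: tokens / 1000 * rate, summed over both sides."""
    rate = rates[model]
    return ((prompt_tokens / 1000) * rate.prompt_per_1k
            + (completion_tokens / 1000) * rate.completion_per_1k)


def total_cost(runs: List[dict], rates: Dict[str, Rate] = RATES) -> float:
    """Sum the cost of every run in a trace (each run is a plain dict here)."""
    return sum(
        run_cost(r["model"], r["prompt_tokens"], r["completion_tokens"], rates)
        for r in runs
    )
```

Passing a different `rates` table is how special pricing would be modeled in this sketch; the token counts themselves are assumed to come from your traces.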

👀 Taking another look at LangChain OpenGPTs

We launched OpenGPTs a couple of months back in response to OpenAI’s GPTs. OpenGPTs is an open source application builder that lets you choose your own models to run and gives you more flexibility when it comes to cognitive architecture. We’ve been working closely with a few enterprise partners to improve OpenGPTs for their needs, and we want to share a bit more about how the platform works under the hood. Check out our blog post where we dive into:

- MessageGraph: A particular type of graph that OpenGPTs runs on.

- Cognitive architectures: The 3 types of cognitive architecture OpenGPTs supports, and how they differ.

- Persistence: How persistence is baked into OpenGPTs via LangGraph checkpoints.

- Configuration: How we use LangChain primitives to configure all these different bots.

- and more...

And check out the demo in which Harrison does a deep-dive on the platform.
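
For readers who want a feel for the MessageGraph idea before reading the post, here is a toy sketch in plain Python. It assumes the core idea is a graph whose only state is a growing list of messages, with each node reading the history and returning one new message to append. `TinyMessageGraph` and its methods are hypothetical names for illustration, not LangGraph's API.

```python
from typing import Callable, Dict, List, Optional

Message = Dict[str, str]                 # e.g. {"role": "user", "content": "hi"}
Node = Callable[[List[Message]], Message]


class TinyMessageGraph:
    """Toy graph whose state is just a list of messages (illustrative only)."""

    def __init__(self) -> None:
        self.nodes: Dict[str, Node] = {}
        self.edges: Dict[str, Optional[str]] = {}
        self.entry: Optional[str] = None

    def add_node(self, name: str, fn: Node) -> None:
        self.nodes[name] = fn

    def add_edge(self, src: str, dst: Optional[str]) -> None:
        # A destination of None means "stop after this node".
        self.edges[src] = dst

    def set_entry(self, name: str) -> None:
        self.entry = name

    def run(self, messages: List[Message], max_steps: int = 10) -> List[Message]:
        history = list(messages)          # copy so the caller's list is untouched
        current = self.entry
        steps = 0
        while current is not None and steps < max_steps:
            # Each node sees the full history and contributes one new message.
            history.append(self.nodes[current](history))
            current = self.edges.get(current)
            steps += 1
        return history


def draft(history: List[Message]) -> Message:
    return {"role": "assistant", "content": "draft: " + history[-1]["content"]}


def review(history: List[Message]) -> Message:
    return {"role": "assistant", "content": "reviewed " + history[-1]["content"]}


graph = TinyMessageGraph()
graph.add_node("draft", draft)
graph.add_node("review", review)
graph.add_edge("draft", "review")
graph.add_edge("review", None)
graph.set_entry("draft")
```

Calling `graph.run([{"role": "user", "content": "hello"}])` returns the original message followed by one message from each node. The two stub nodes stand in for model or tool calls.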

🧠 Better RAG

We took a look at two popular papers, Self-RAG and C-RAG, which introduce the idea of using an LLM to self-correct poor-quality retrieval and/or generations to improve the overall quality of a RAG system. We thought LangGraph could easily be used for "flow engineering" to reflect on and improve the strategy. Check out two cookbooks we created to implement the ideas in the papers, and read more about it on our blog.
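
As a rough illustration of the self-correcting flow described above, here is a minimal sketch in plain Python: retrieve, grade the results, and rewrite the query and retry if nothing relevant comes back. It is not the papers' method or the cookbooks' code. The function names are hypothetical, and the toy keyword-based stand-ins take the place of the LLM and retriever calls a real system would make.

```python
from typing import Callable, List


def corrective_rag(
    question: str,
    retrieve: Callable[[str], List[str]],
    grade: Callable[[str, str], bool],
    rewrite: Callable[[str], str],
    generate: Callable[[str, List[str]], str],
    max_rewrites: int = 2,
) -> str:
    """Retrieve, keep only documents graded relevant, retry with a rewritten query."""
    query = question
    for _ in range(max_rewrites + 1):
        relevant = [doc for doc in retrieve(query) if grade(query, doc)]
        if relevant:
            return generate(question, relevant)
        query = rewrite(query)            # self-correction step
    return generate(question, [])         # give up gracefully


# Toy stand-ins (assumptions for the demo, not real retrievers or LLM calls).
CORPUS = [
    "langgraph builds cyclic agent graphs",
    "langsmith traces llm calls",
]


def toy_retrieve(query: str) -> List[str]:
    terms = set(query.lower().split())
    return [doc for doc in CORPUS if terms & set(doc.split())]


def toy_grade(query: str, doc: str) -> bool:
    return any(term in doc for term in query.lower().split())


def toy_rewrite(query: str) -> str:
    return query.replace("langgraf", "langgraph")   # pretend an LLM fixed the typo


def toy_generate(question: str, docs: List[str]) -> str:
    if not docs:
        return "I don't know."
    return f"Answer based on {len(docs)} document(s): {docs[0]}"
```

With these stand-ins, a misspelled query such as "langgraf persistence" fails the first retrieval, gets rewritten, and succeeds on the second pass, which is the loop a graph-based implementation would express as nodes and conditional edges.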

We also launched a new YouTube series around RAG: RAG from Scratch! Lance from LangChain (that has a nice ring to it 😉) breaks down RAG concepts, starting with the basics. We’ll add more educational content in the coming weeks.

🕸️ LangGraph

We’ve already mentioned LangGraph a few times - and that’s because we’re really excited about it! We’ve added a bunch of examples and some new functionality this past week:

- New feature: persistence (YouTube, notebook). Easily save your interactions with agents!

- New example: WebVoyager (YouTube, notebook). Create an agent that can browse the internet!

- New example: updated OpenGPTs to use LangGraph

- New example: RAG with LangGraph (Self-RAG, Corrective RAG)

Catch up on the full repo here, and start the YouTube series here.
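
To give a sense of what persistence means for agent interactions, here is a minimal, library-free sketch: conversation state is saved under a thread ID after each turn and reloaded at the start of the next. `InMemoryCheckpointer` and `chat_turn` are hypothetical names, and this is not LangGraph's checkpoint API; see the notebook for the real thing.

```python
import json
from typing import Callable, Dict, List

Message = Dict[str, str]


class InMemoryCheckpointer:
    """Toy checkpoint store keyed by thread ID (illustrative only)."""

    def __init__(self) -> None:
        self._store: Dict[str, str] = {}

    def save(self, thread_id: str, messages: List[Message]) -> None:
        # Serialize so the saved state cannot be mutated by accident.
        self._store[thread_id] = json.dumps(messages)

    def load(self, thread_id: str) -> List[Message]:
        return json.loads(self._store.get(thread_id, "[]"))


def chat_turn(
    checkpointer: InMemoryCheckpointer,
    thread_id: str,
    user_text: str,
    respond: Callable[[List[Message]], str],
) -> List[Message]:
    """Load the thread's history, add one user/assistant exchange, save it back."""
    history = checkpointer.load(thread_id)
    history.append({"role": "user", "content": user_text})
    history.append({"role": "assistant", "content": respond(history)})
    checkpointer.save(thread_id, history)
    return history


def count_reply(history: List[Message]) -> str:
    # Stand-in for an agent: reports how many user messages it has seen.
    seen = sum(1 for m in history if m["role"] == "user")
    return f"I have seen {seen} user message(s)."
```

Because each thread ID maps to its own saved history, two calls on the same thread accumulate context while a new thread starts fresh. A durable version would swap the dictionary for a database or file store.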

🤓 Stay in the Know

- LangChain.js reaches 1M downloads in January 🥳

- Case studies with Elastic and CommandBar

- Meet Connery: An Open-Source Plugin Infrastructure for OpenGPTs and LLM apps

😍 From the Community

- LangChain Support for Workers AI, Vectorize and D1 by Ricky Robinett, Kristian Freeman, and Jacob Lee

- Document Search and Retrieval using RAG by Aniket Maurya

- Building a Wikipedia Chatbot Using Astra DB, LangChain, and Vercel by Carter Rabasa

- Generative AI: An introduction to prompt engineering and LangChain by Janeth Graziani

- Generating Usable Text with AI by Mutt Data

![[Week of 2/5] LangChain Release Notes](/content/images/size/w760/format/webp/2024/02/Blog-post---1.png)