LangChain-Core, LangChain Community, and LangChain v0.1 😃

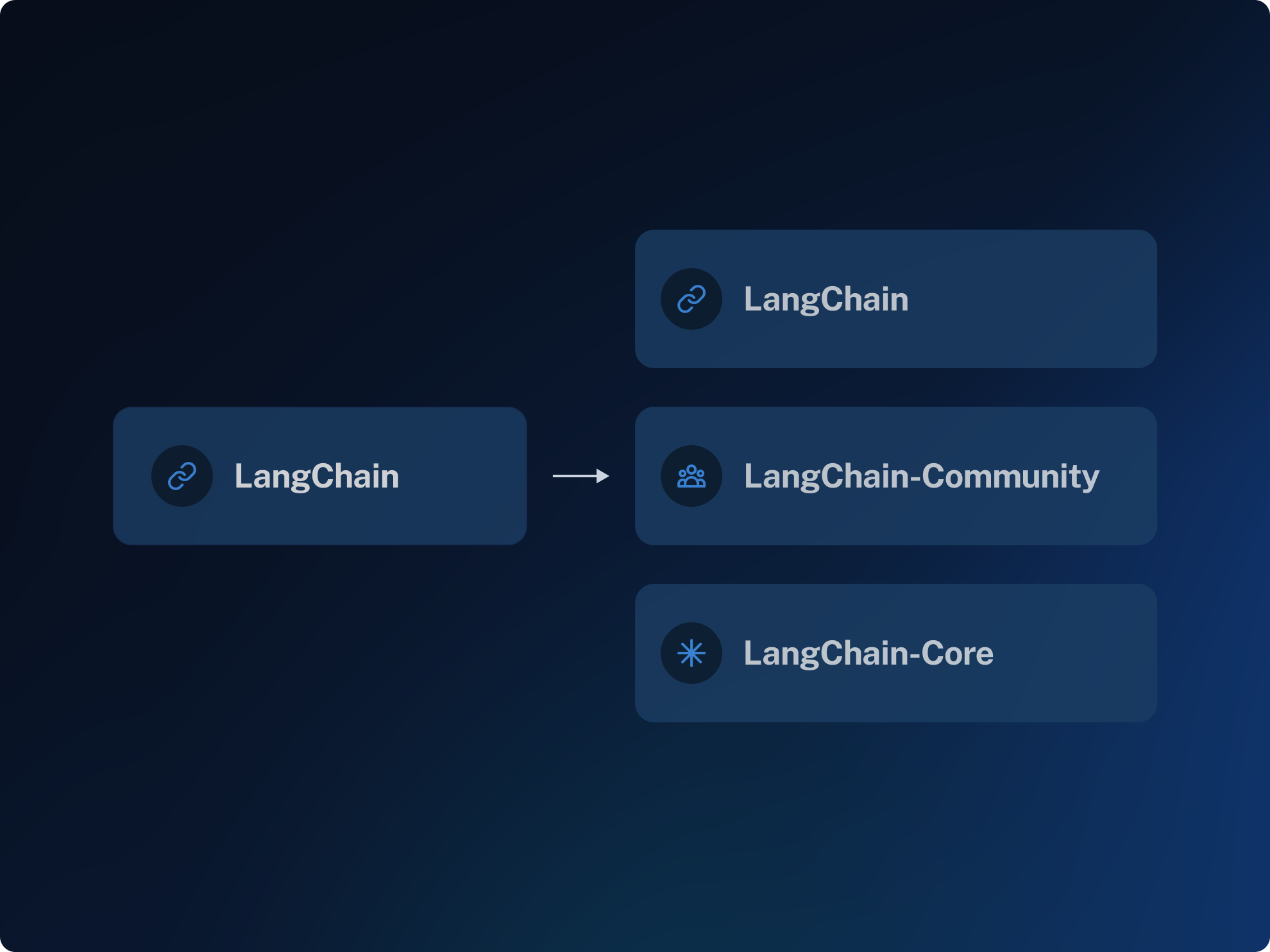

This week we released the first step in a re-architecture of the LangChain package. This splits up the previous langchain package into three different packages:

- langchain-core: contains core LangChain abstractions as well as LangChain Expression Language, a truly composable way to construct custom chains.

- langchain-community: contains third-party integrations into all the various components.

- langchain: contains chains, agents, and retrieval methods that make up the cognitive architecture of applications.

We also announced a path to LangChain v0.1 in early January!

This is a HUGE deal for our users and the community. LangChain is the standard for building LLM-powered apps, and in roughly its first year of existence, the package has changed rapidly to keep pace with the industry. In this next phase of LangChain, we’re committed to creating stable abstractions and leaner packages that companies can depend on and deploy to production. This was a big lift by the team, and we did everything in a backwards-compatible way to keep teams shipping quickly with low hassle.

Read more about this split in our blog here.

New in LangChain 💫

- Support for Mistral AI (in JS): We added a package for interacting with Mistral AI chat, streaming, and embedding endpoints. The LangChain implementation of Mistral's models uses their hosted generation API, making it easier to access their models without needing to run them locally. Check out the docs.

- Support for Gemini: You can access Google's gemini and gemini-vision models, as well as other generative models in LangChain now. Excited to see this one go live! Check out the docs.

- ByteStore Support in MultiVectorRetriever: We added persistence capabilities in MultiVectorRetriever using the ByteStore abstraction, which allows you to choose where to store key/value data. Check out the docs or get started with a template.
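To make the ByteStore idea above a bit more concrete, here is a minimal, dependency-free sketch. It is not LangChain's implementation: `InMemoryByteStoreSketch`, `store_parents`, and `load_parents` are hypothetical names invented for illustration. The method names (`mset`, `mget`, `mdelete`, `yield_keys`) are meant to mirror LangChain's key/value store interface as we understand it, so check the docs before relying on that. The point is only that parent documents are persisted as bytes under an id, and the ids returned by a search over child chunks are used to fetch them back.

```python
import json
from typing import Dict, Iterator, List, Optional, Sequence, Tuple


class InMemoryByteStoreSketch:
    """Hypothetical stand-in for a byte-oriented key/value store.

    A real deployment would swap this for a store backed by disk,
    Redis, or similar; only the storage location changes.
    """

    def __init__(self) -> None:
        self._data: Dict[str, bytes] = {}

    def mset(self, pairs: Sequence[Tuple[str, bytes]]) -> None:
        # Write several key/value pairs at once.
        for key, value in pairs:
            self._data[key] = value

    def mget(self, keys: Sequence[str]) -> List[Optional[bytes]]:
        # Missing keys come back as None, in the same order as `keys`.
        return [self._data.get(key) for key in keys]

    def mdelete(self, keys: Sequence[str]) -> None:
        for key in keys:
            self._data.pop(key, None)

    def yield_keys(self) -> Iterator[str]:
        yield from self._data


def store_parents(store: InMemoryByteStoreSketch, parents: Dict[str, str]) -> None:
    """Serialize each parent document to bytes and persist it under its id."""
    store.mset(
        [
            (doc_id, json.dumps({"text": text}).encode("utf-8"))
            for doc_id, text in parents.items()
        ]
    )


def load_parents(store: InMemoryByteStoreSketch, doc_ids: Sequence[str]) -> List[str]:
    """Fetch parent documents for the ids attached to matching child chunks."""
    unique_ids: List[str] = []
    for doc_id in doc_ids:
        if doc_id not in unique_ids:  # keep first-seen order, drop repeats
            unique_ids.append(doc_id)
    texts: List[str] = []
    for raw in store.mget(unique_ids):
        if raw is not None:
            texts.append(json.loads(raw.decode("utf-8"))["text"])
    return texts


store = InMemoryByteStoreSketch()
store_parents(
    store,
    {
        "doc-1": "Full text of the first document.",
        "doc-2": "Full text of the second document.",
    },
)

# Pretend a vector search over child chunks returned these parent ids.
hits = ["doc-2", "doc-2", "doc-1"]
parents = load_parents(store, hits)
```

Because the retrieval step only talks to the store through `mget` and `mset`, moving from memory to a persistent backend should not require touching the retrieval logic, which is the appeal of the abstraction.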

LangChain Templates 📁

New templates include:

- Proposition-based retrieval: Multi-vector indexing strategy proposed in Dense X Retrieval

- Multi-modal RAG with Google Gemini: A fun multimodal example with gemini-pro-vision

- Multi Vector Retrieval for Multi Modal RAG: multi-modal RAG using Chroma with the multi-vector retriever

- Gmail Agent with OpenAI Functions: read, search through, and draft emails to respond on your behalf 🪄

- Cohere Embeddings Librarian: This is a fun one if you like books — some good holiday reading recommendations 📚 Thank you Billy Trend at Cohere!

Check out all the templates here — great starter code for most common applications and some creative ones too!

In Case You Missed It 👀

Blog Posts

- Multimodal RAG. Check out Lance’s blog post about building a multimodal RAG app to ask questions about slide decks, which are often rich with visual information and graphs. Instead of thumbing through a 100-page presentation, get the information you want instantly.

- Benchmarking RAG of Table Data. Tables are super dense with information, and how you store and retrieve that information to get good results in your RAG app is critical. We tried three different strategies and benchmarked the results to see how different model providers stacked up.

If you’re more of a visual learner, we walk through our findings in this 🎥 YouTube tutorial.

- Extracting Insight from Chat Conversations. From extracting entity data to auto-populating forms and calling external functions, LLMs are expected to generate high-quality structured outputs. In this blog, we benchmark different LLMs to see how well they fare on extracting useful insights from chat logs.

Case Studies

- Transforming Mortgage Ops. Read about how InstaMortgage improved speed to resolution by an average of 67% and decreased error rates significantly with the help of LangChain and LangSmith 💪

Community Favorites ❤️

- Creating an AI Chatbot to interact with 6,000 tools by Anil Chandra Naidu Matcha

- Introducing the Docugami KG-RAG Template for LangChain: Better Results than OpenAI Assistants by Taqi Jaffri

- Turbocharge RAG with LangChain and Vespa Streaming Mode for Sharded Data by Jo Kristian Bergum

- An LLM Compiler for Parallel Function Calling for an effective plan of executing multiple tasks in parallel at large scale.

- LangChain and Neo4j classes. Hands-on training for building with LLMs

- Practices for Governing Agentic AI Systems for creating safety best practices for agents by OpenAI

![[Week of 12/11] LangChain Release Notes](/content/images/size/w760/format/webp/2023/12/5-social.png)