New to LangChain Templates 📁

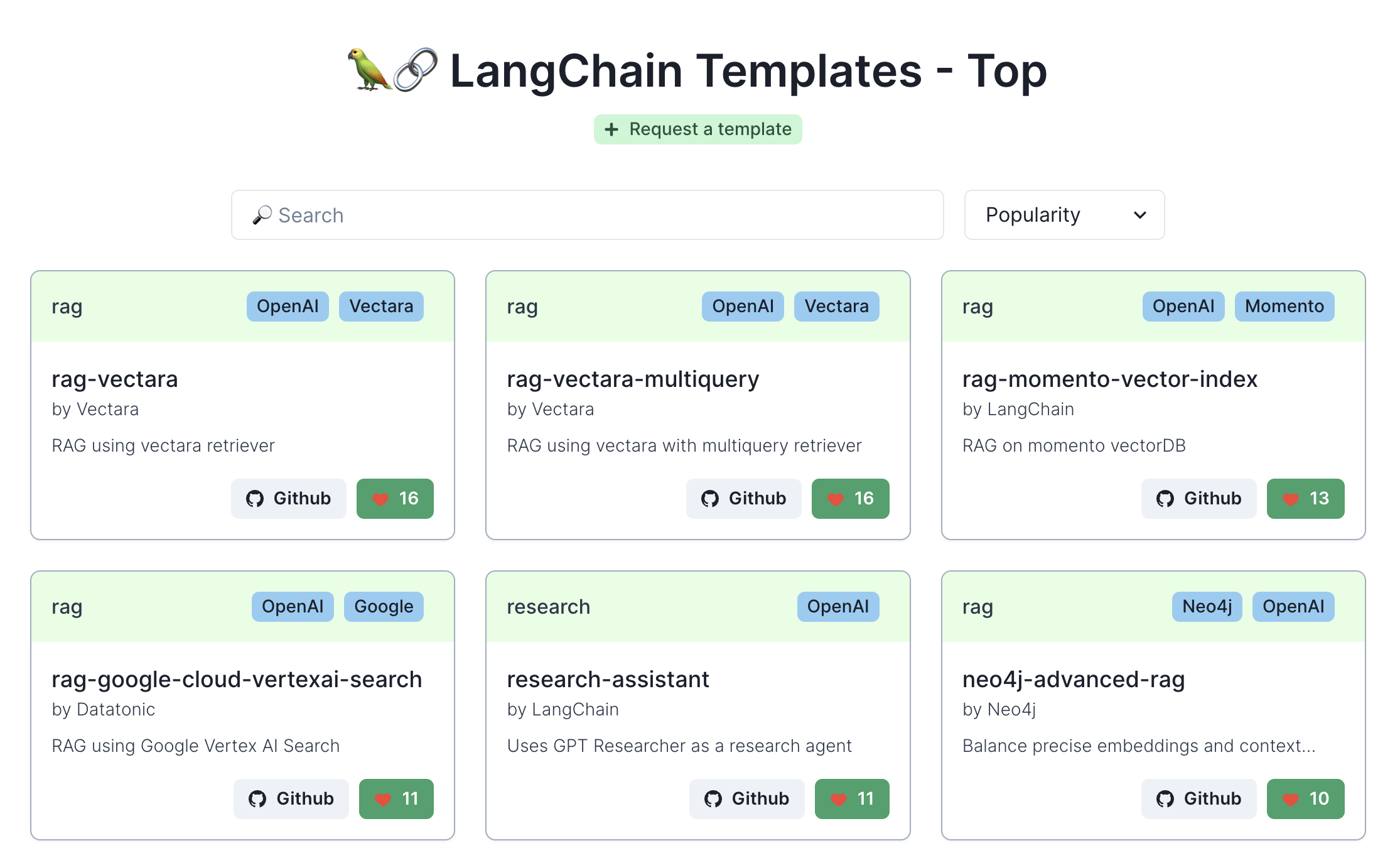

LangChain Templates are the easiest way to get started building GenAI applications. We have dozens of examples that you can adopt for your own use cases, giving you starter code that’s easy to customize. As a big bonus, LangChain Templates integrate seamlessly with LangServe and LangSmith, so you can deploy and monitor them too ❤️.

Check out https://templates.langchain.com/ to see them all!

A few of our most recent favorites are:

- Research assistant: build a research assistant with a retriever of your choice! Inspired by the great gpt-researcher🙇.

- Advanced RAG with Neo4j: sometimes basic RAG doesn’t cut it 😏. We highly recommend this template if you want to experiment with different retrieval strategies. You’ll learn about different techniques: traditional, parent retriever, hypothetical questions, and summarization.

- Extraction with Anthropic Models: use prompting techniques to do extraction with Anthropic models.

Coming soon: Hosted LangServe 🦜🏓

We’re hard at work building Hosted LangServe. This service will make it super easy to deploy LangServe apps and get tracking in LangSmith. Sign up to get notified for early access here. We’re also eager to hear about what kind of deployment challenges you’re having with LLM-powered apps. Feel free to hit reply, and let us know how we can support you better!

We also collaborated with Google to make it dead simple to deploy LangServe apps on Cloud Run — read about it here.

7 Days of LangSmith 🦜⚒️

In case you missed it, we put together a LangSmith demo series to highlight how the platform can help you build production-ready gen AI applications faster and more reliably!

Check out these 7 videos, each <10 min, and you'll be a LangSmith pro in under an hour 💪.

LangChain Benchmarks 📊

LangChain Benchmarks is a Python package with associated datasets for experimenting with and benchmarking different cognitive architectures. Each benchmark task targets key functionality within common LLM applications, such as retrieval-based Q&A, extraction, agent tool use, and more.

For our first benchmark, we released a Q&A dataset over the LangChain Python documentation. See the blog post here for our results and instructions on how to test your own cognitive architecture.

Helpful resources 🔗: docs, repository, Q&A leaderboard.

OpenGPTs ✨

After OpenAI’s DevDay, we launched a project inspired by GPTs and the Assistants API. It’s called OpenGPTs, and it implements similar functionality in an open source manner, giving you full flexibility over which language model, vector store, tools, and retrieval algorithm are used.

Helpful resources 🔗:

In case you missed it

- Blog Posts:

- OpenAI’s Bet on a Cognitive Architecture. Get Harrison’s take on the future of cognitive architectures and why it should matter to companies.

- Adding Long-Term Memory to OpenGPTs. Read more about why long-term memory is under-explored in LLM apps and the details behind how we implemented it for our Dungeons and Dragons 🐉 OpenGPT app.

- Deconstructing RAG. An overview of all the components that go into retrieval-augmented generation.

- Case Studies:

- How Adyen Uses LangChain. Learn how Adyen uses LangChain and LangSmith to help with customer support functions.

- How Morningstar Uses LangChain. Learn how Morningstar uses LangChain to power their Morningstar Intelligence Engine.

- LangChain 🤝 Microsoft:

- LangChain Expands Collaboration with Microsoft. We’re excited about this and our mutual customers should be too.

- YouTube Demos:

Community Favorites

- “Needle in a Haystack” Visualizations for long context windows by Greg Kamradt

- Querypls: Prompt to SQL OSS implementation by Abdul Samad Siddiqui

- Hybrid Retrieval by Leonie Monigatti

- Building an LLM Application for Document Q&A Using Chainlit, Qdrant and Zephyr by Plaban Nayak

- AI Driven Consultant by Gil Fernandes

- Docker Template for Research Assistant by Joshua Sundance Bailey

A Peek at LangChain Core 0.1 🫣

langchain-core version 0.1 is on its way! Follow the GitHub discussion here: https://github.com/langchain-ai/langchain/discussions/13823

See you in a couple weeks!

![[Week of 11/27] LangChain Release Notes](/content/images/size/w760/format/webp/2023/12/image--10-.png)